The Future of Human-Machine Interaction

The rise of AI avatars and AI replicas isn't just a trend; it's the future of interaction.

For most of computing history, our interactions with machines have been rigid and mechanical — clicking, typing, navigating endless screens and dropdowns. Then came the mobile shift, unlocking new modalities like voice. Assistants like Siri offered a glimpse of more natural interactions, and while voice applications are rapidly gaining momentum, we’re now at the edge of something potentially far bigger.

We are entering a new era of human–machine interaction. One that’s multimodal, emotionally intelligent, and deeply personal. Video, voice, memory, and emotion are converging to create AI Avatars or AI Replicas that feel less like tools and more like teammates, collaborators, and even companions.

Imagine an AI that looks you in the eye, smiles, remembers your preferences, and responds not just to your words, but to your emotions — all in real-time. These aren’t static avatars; they’re dynamic, expressive, and increasingly lifelike. These AI Replicas are starting to become a part of the fabric of how we work, learn, and connect across industries like coaching, healthcare, elder care, customer support, and more.

In this post, we’ll explore the technological shifts and behavioral changes driving the next era of human-machine interaction. We’ll focus first on the infrastructure and enabling layers, with a follow-up post diving into the applications being built on top.

We recommend interacting with Tavus’ AI replica, Charlie, to get a feel for an AI replica that feels awfully human-like. Let’s dig in!

Why Now: A Perfect Storm of Technological and Behavioral Change

The timing couldn’t be more compelling. Text-based chatbots like ChatGPT brought personalized, real-time support into our daily routines. Voice assistants added a layer of natural, hands-free interaction. Now, we’re entering the next phase: AI Avatars and Replicas that don’t just assist, but act as collaborators and companions. To truly work alongside us, they must interact like us — with empathy, context, and personality. As humans, we crave connection, so it’s no surprise we now expect our digital tools to evolve from transactional to relational.

We believe a series of foundational shifts, both technological and behavioral, are converging to redefine how we interact with intelligent systems. These shifts make AI Avatars and Replicas not only possible, but increasingly desirable.

1. Breakthroughs Across the Tech Stack:

Advancements across multiple layers of the stack are enabling the rise of AI avatars that feel increasingly lifelike and responsive. Here are a few of the key layers of the stack that have enabled this to occur at scale:

Real-Time Video Infrastructure: Technologies like WebRTC now support seamless, low-latency video conferencing, allowing real-time, end-to-end visual interactions in distributed environments.

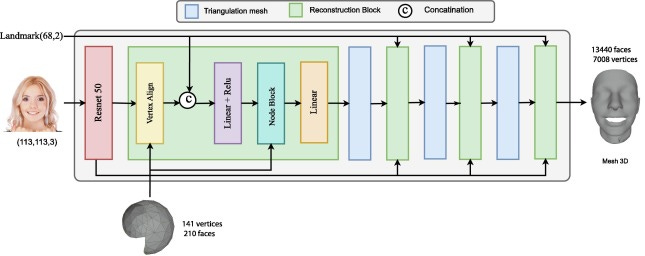

3D Face Recreation: With just a short video scan, systems like Tavus and HeyGen can now clone human faces with high fidelity and achieve fairly accurate lip-sync, bringing avatars closer to photorealistic presence. This was historically very hard to do, and most first-generation players offered ‘talking-head’ style avatars that felt less realistic and less human-like.

Perception Systems: Beyond traditional computer vision, modern systems can continuously track gestures, micro-expressions, eye contact, and motion. This enables avatars to interpret and respond to real-time visual cues. These perception systems also allow the AI avatars to understand what else may be happening in nearby surroundings.

Speech Technology: High-quality TTS (text-to-speech) and STT (speech-to-text) systems (e.g. Deepgram and ElevenLabs) now enable fluid, natural voice interaction that feels synchronous and expressive. Running these systems in real time with very low latency is what makes a conversation feel natural and fluid.

Language Models: LLMs provide a cognitive layer that was not previously possible. Grounding avatars in real-world context enables rich, domain-specific interactions and makes them far more capable. AI avatars now feel thoughtful and informed on a topic, rather than programmed to know only a handful of items.
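To make the layering concrete, here is a minimal sketch of how a single conversational turn might pass through these layers in order. Everything in it is hypothetical: `Turn`, `run_turn`, and the stub stages are illustrative names that stand in for real perception, speech, and language services, and none of them reflect any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Turn:
    """State carried through one conversational turn (hypothetical structure)."""
    audio_in: bytes
    visual_cues: List[str] = field(default_factory=list)
    transcript: str = ""
    reply_text: str = ""
    audio_out: bytes = b""


Stage = Callable[[Turn], Turn]


def perceive(turn: Turn) -> Turn:
    # Stub perception layer: a real system would analyze video frames here.
    turn.visual_cues.append("user_smiling")
    return turn


def transcribe(turn: Turn) -> Turn:
    # Stub STT layer: for illustration, the "audio" is just UTF-8 text.
    turn.transcript = turn.audio_in.decode("utf-8")
    return turn


def respond(turn: Turn) -> Turn:
    # Stub language layer: a real system would call an LLM with transcript and cues.
    mood = "Glad to see you smiling! " if "user_smiling" in turn.visual_cues else ""
    turn.reply_text = f"{mood}You said: {turn.transcript}"
    return turn


def synthesize(turn: Turn) -> Turn:
    # Stub TTS layer: encodes the reply text instead of generating speech.
    turn.audio_out = turn.reply_text.encode("utf-8")
    return turn


# Order mirrors the stack described above: perception, speech-in, language, speech-out.
PIPELINE: List[Stage] = [perceive, transcribe, respond, synthesize]


def run_turn(audio_in: bytes, stages: List[Stage]) -> Turn:
    """Run each stage in sequence, passing the shared Turn state along."""
    turn = Turn(audio_in=audio_in)
    for stage in stages:
        turn = stage(turn)
    return turn


if __name__ == "__main__":
    result = run_turn(b"hello there", PIPELINE)
    print(result.reply_text)
```

The stages run strictly one after another here to keep the sketch short. A real-time system would more likely stream partial results between stages so the avatar can begin responding before the user has finished speaking.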

2. Persistent Memory:

For AI avatars to feel more ‘human’ and function as trusted co-workers, they must do more than react in the moment. They need memory. Memory has evolved significantly since the early LLMs were released. Newer systems offer more persistent memory and are identity-aware. A growing number of tools and frameworks also enable these AI avatars to remember facts. Today’s avatars are evolving from one-off responders to companions that can track context over time.

Within sessions they can manage long-form conversations, revisiting earlier points and adapting as the dialogue unfolds. This represents a significant leap forward from the short memory windows of early systems and is an important detail for many application use cases (e.g., elderly care, personalized coaching).

Across sessions they can recall past interactions, goals, and preferences, retrieving relevant context from previous discussions to personalize future ones.
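The two kinds of memory described above can be sketched as a simple two-tier structure. This is a toy illustration under our own assumptions, not how any particular product works: `AvatarMemory` and its methods are hypothetical names, the in-session context is just the most recent utterances, and cross-session recall uses naive keyword overlap where a real system would more likely use summaries or embedding search.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AvatarMemory:
    """Hypothetical two-tier memory: in-session context plus per-user long-term notes."""
    window_size: int = 4  # assumed to be a positive integer
    session_log: List[str] = field(default_factory=list)
    long_term: Dict[str, List[str]] = field(default_factory=dict)

    def observe(self, utterance: str) -> None:
        """Record an utterance from the current session."""
        self.session_log.append(utterance)

    def context(self) -> List[str]:
        """Within a session: the most recent utterances the avatar conditions on."""
        return self.session_log[-self.window_size:]

    def end_session(self, user_id: str) -> None:
        """Across sessions: persist this session's log under the user's id."""
        self.long_term.setdefault(user_id, []).extend(self.session_log)
        self.session_log = []

    def recall(self, user_id: str, query: str) -> List[str]:
        """Return stored notes that share at least one word with the query."""
        words = set(query.lower().split())
        return [
            note
            for note in self.long_term.get(user_id, [])
            if words & set(note.lower().split())
        ]


if __name__ == "__main__":
    memory = AvatarMemory(window_size=2)
    memory.observe("my goal is running a marathon")
    memory.observe("i prefer morning sessions")
    memory.observe("my knee hurts today")
    print(memory.context())  # only the two most recent utterances
    memory.end_session("user-1")
    print(memory.recall("user-1", "knee update"))
```

In a coaching scenario, for example, the next session could open by calling `recall` with the topic at hand and feeding the returned notes into the avatar's prompt.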

3. Personality and Emotional Intelligence:

These AI avatars are not just functional, but they are also emotionally attuned. This type of emotional intelligence transforms the user experience from transactional to relational, building trust. They are no longer just talking heads that have one emotion for an entire conversation. Emerging avatars are now learning to signal:

Positive emotions such as care, sensitivity, warmth, empathy, enthusiasm, and encouragement.

Subtle emotional states through tone, timing, and visual expression (e.g., pausing before delivering some hard news, the same way a human might, or smiling when celebrating success).
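One simple way to picture this kind of signaling is as a lookup from the kind of message being delivered to a set of delivery cues. The sketch below is purely illustrative: `Delivery`, `plan_delivery`, the message kinds, and the pause durations are all hypothetical placeholders, and a real avatar would presumably derive these cues from a learned model rather than a fixed table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Delivery:
    """Hypothetical cues an avatar could apply when speaking a line."""
    pause_seconds: float  # silence before speaking (placeholder values)
    expression: str       # facial expression to render
    tone: str             # vocal tone hint passed to speech synthesis


# Illustrative mapping only; the kinds and values are assumptions.
DELIVERY_BY_KIND = {
    "hard_news": Delivery(pause_seconds=1.0, expression="concerned", tone="gentle"),
    "celebration": Delivery(pause_seconds=0.0, expression="smile", tone="enthusiastic"),
}

DEFAULT_DELIVERY = Delivery(pause_seconds=0.2, expression="attentive", tone="warm")


def plan_delivery(message_kind: str) -> Delivery:
    """Pick delivery cues for a message, falling back to a neutral default."""
    return DELIVERY_BY_KIND.get(message_kind, DEFAULT_DELIVERY)


if __name__ == "__main__":
    print(plan_delivery("hard_news"))
    print(plan_delivery("celebration"))
```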

4. Intellectual Awareness:

These avatars aren’t just emotionally aware; they’re also intellectually engaged, much as humans are. Powered by LLMs and fine-tuned contextual models, they can quickly understand what you’re trying to learn or accomplish, respond fluidly, and adjust as your needs evolve. They stay up to date on current events and topics, reasoning on the fly much like a human would.

Many are also trained with deep domain expertise, enabling them to operate effectively in specialized fields like healthcare, finance, education, and beyond.

5. Deep Personalization:

Personalization will no longer be just about UI layouts or content suggestions; it will shape how your AI avatar sounds, what it wears, what it remembers, how it reacts, and much more. By connecting into existing tools and knowledge systems, these AI avatars can surface relevant information from outside the current interaction. Just like humans, they pull in past conversations and shared context to make the present exchange more intelligent and personalized.

There’s clear data showing society’s growing comfort with AI avatars, in both animated and more ‘human-like’ forms. Character AI, for example, was reported to have 20 million+ monthly active users worldwide. We’re also seeing Delphi and other AI avatars start to pick up traction, driven by these major technological unlocks.

Infrastructure Layer: A New Stack for Presence

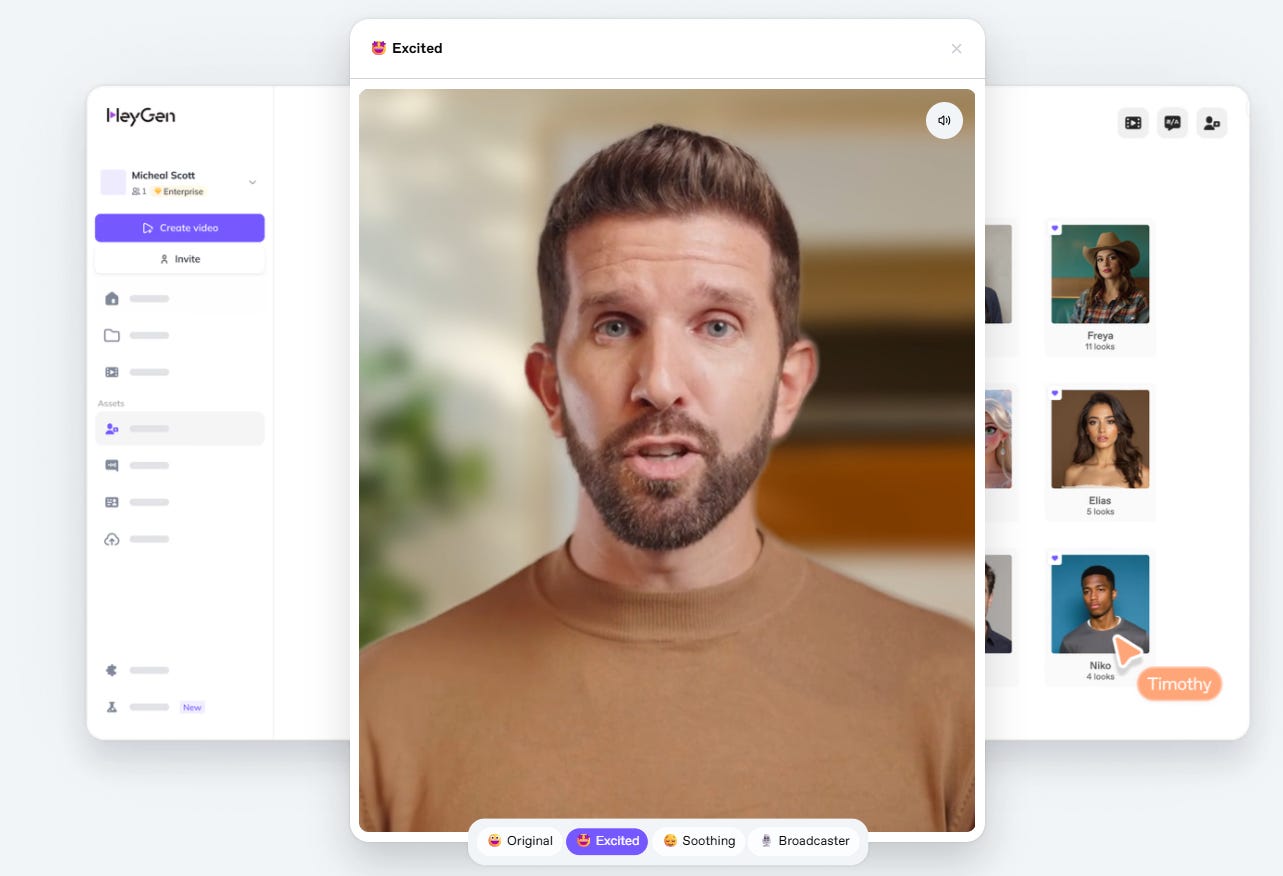

None of this is possible without a robust infrastructure layer. Tools like Tavus, Synthesia, and HeyGen are laying the foundation, each with a different philosophy.

For example, companies like Tavus have taken a developer-first approach. Rather than focusing solely on templated, pre-rendered content, Tavus enables real-time, personalized, video-based communication through simple APIs. This makes it frictionless for developers to embed conversational video into any workflow — from onboarding to telehealth to asynchronous sales outreach.

This flexibility opens the door to a horizontal platform play, where Tavus becomes not just a tool but an infrastructure layer for human-like interaction at scale. It dramatically lowers the barrier to experimentation, and we’re seeing incredible creativity in the developer ecosystem as a result.

Synthesia is another fast-growing company in this space. They’ve built an AI platform that helps individuals and businesses turn text into video, letting teams create studio-quality videos with AI avatars and voiceovers in 140+ languages. By using Synthesia, large companies like Zoom, Reuters, and Heineken can easily create videos for use cases across sales and marketing, localization (translations and dubbing), learning & development, and business operations.

Other video AI infrastructure tools include Sieve, Descript, and TwelveLabs, as well as models from larger providers, such as Sora from OpenAI.

In just the past two years, we’ve seen an incredible set of infrastructure tools for video AI emerge, helping everyone from creators to developers to large enterprises build dynamic, scalable video AI applications.

Looking Ahead: A More Human Digital World

As multimodal models continue to mature and platforms like Tavus and Synthesia redefine the infrastructure layer, we’re entering an era where the frontier of interface design shifts away from pixels and layouts and toward the nuances of human-to-computer relationships.

The interfaces of the future won’t be confined to screens; they’ll be emotionally intelligent systems that understand context, remember preferences, and adapt behaviorally over time. A great AI won’t just respond; it will intuit. It will know when to offer encouragement, when to challenge assumptions, when to pause, and when to smile. New wearable form factors (like Meta glasses) that allow us to interact with AI in the real world will also proliferate.

It’s clear that the next leap in HCI will be ambient, embodied, and deeply personal. The question isn't just how we’ll interact with computers, but who they’ll become to us.