The Big 5 And Their AI Stacks

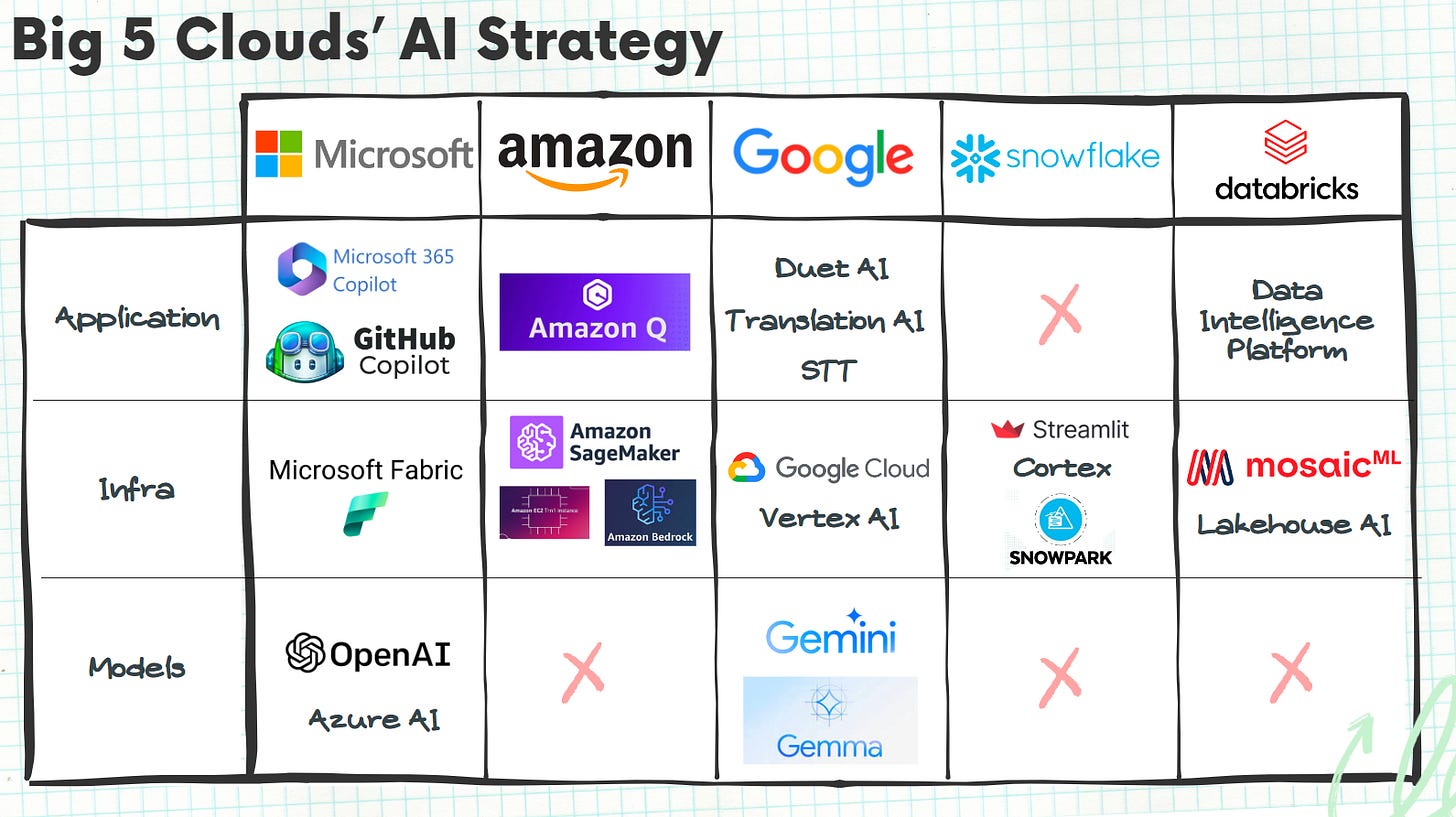

A refresh on where Microsoft, Amazon, Google, Snowflake, and Databricks are building in AI at the model, infrastructure, and application layers

While all eyes were on Nvidia this week during its well-attended GTC conference, we thought it would be a good opportunity to revisit the five major cloud providers (Microsoft, Amazon, Google, Snowflake, and Databricks) and how they are building out their AI stacks. They certainly have not been asleep at the wheel and have played a key part in the AI wave, but what exactly are they doing at each layer of the stack?

While the Big 5 are vying to capture developer mindshare and uphold their dominance as ‘cloud providers’, they are also diving into pivotal AI initiatives. They have established partnerships with industry leaders such as Nvidia, OpenAI, and Hugging Face, executed key strategic acquisitions (MosaicML, Neeva), made significant strategic hires, and announced LLMs with a billion or more parameters. Just this week, Microsoft made a splashy announcement by hiring Mustafa Suleyman, former Inflection AI CEO and Co-Founder, as the new CEO of Microsoft AI.

In this week’s post, we summarize what the AI stack (at the model, infrastructure, and application layers) currently looks like for each of the Big 5. By no means is this post a comprehensive listing of what each of the Big 5 is doing; rather, it highlights what we view as most critical to each of their stacks. In our next edition, we’ll dig into the key insights and learnings from each.

Let’s dig in.

Microsoft:

Microsoft is making it easy to build, scale, and deploy multimodal generative AI experiences with Azure AI (powered by OpenAI) while also pushing out new AI apps for employees, such as Copilot for Microsoft 365 and GitHub Copilot. It is arguably the only one of the Big 5 that is focused on every layer of the stack (model > infra > apps).

Model Layer:

OpenAI: Microsoft’s investment in and partnership with OpenAI gives it supercomputing at scale and new AI-powered experiences, and makes Azure OpenAI’s exclusive cloud provider.

Azure OpenAI Service: Provides businesses and developers with high-performance AI models, such as GPT-3.5, Codex, and DALL∙E 2, at production scale with industry-leading uptime.
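For readers who want to see what access to these models looks like in practice, here is a minimal Python sketch of how a chat request to an Azure OpenAI deployment is typically assembled. The resource name, deployment name, API key, and API version are placeholders we assume for illustration, and the request is only built here, not sent.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, user_message,
                       api_version="2024-02-01"):
    """Assemble (but do not send) a chat-completions request for Azure OpenAI."""
    # Each Azure OpenAI resource has its own hostname, and each model is
    # addressed through a named deployment inside that resource.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = {"messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values; substitute a real resource, deployment, and key.
request = build_chat_request("my-resource", "my-gpt35-deployment", "<API_KEY>",
                             "Summarize our Q1 sales notes.")
print(request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send it, assuming the resource and deployment actually exist in your Azure subscription.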

Infrastructure Layer:

Azure AI: Allows companies to build intelligent applications at enterprise scale, leveraging the Azure AI portfolio of tools.

Microsoft Fabric: Every intelligent application starts with data at the core, which is why Fabric has become key to Microsoft. With Fabric, users can directly apply AI to their data, regardless of its location. OneLake consolidates data from diverse sources, eliminating silos and providing a unified view. Azure OpenAI Service integrates with Microsoft Fabric, letting users apply generative AI to their data and work through a range of data tasks in conversational language.

App Layer:

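Copilot for Microsoft 365: Microsoft's Copilot is an AI assistant that brings generative AI into the Microsoft 365 apps employees already use (Word, Excel, PowerPoint, Outlook, Teams). A Copilot experience is also available in Microsoft Fabric, allowing users to harness generative AI for insightful discoveries and conversational language use across diverse data tasks.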

GitHub Copilot: GitHub Copilot is an AI-powered coding assistant that helps developers write code faster and with fewer errors.

Other Relevant: Microsoft Teams, Microsoft Designer, AI-powered search in Bing and Edge

Amazon:

Amazon has not officially announced its own LLM and has instead concentrated on partnering with AI model startups for such offerings. While it has made significant advancements at the infrastructure layer, it has placed less emphasis on the application layer, offering only the essential apps that we consider table stakes.

Model Layer:

None today. Amazon does not have its own foundation model or LLM today, but it is rumored to be working on a 2 trillion parameter model known as Olympus.

Infrastructure Layer:

Amazon Bedrock: Fully managed service offering a diverse selection of high-performing foundation models from leading AI startups (Anthropic, Stability, AI21, etc.) and Amazon. Bedrock is accessible through an API and offers capabilities for experimenting, evaluating, customizing with data, and building GenAI apps.
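As a rough illustration of what "accessible through an API" means here, the sketch below builds a request body for one of Anthropic's Claude models and, in a separate function, shows how it could be sent through the AWS SDK for Python (boto3). The model ID, region, and prompt are our own assumptions for the example; actually invoking the model requires boto3, AWS credentials, and model access enabled in the account.

```python
import json


def build_claude_request(prompt, max_tokens=256):
    """Build the JSON body Bedrock expects for Anthropic's Messages format."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    })


def invoke(prompt, model_id="anthropic.claude-3-sonnet-20240229-v1:0",
           region="us-east-1"):
    """Send the prompt to Bedrock; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above works without it

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(modelId=model_id,
                                   body=build_claude_request(prompt))
    # The response body is a stream; Claude returns a list of content blocks.
    return json.loads(response["body"].read())["content"][0]["text"]


# Only the request body is built here; nothing is sent to AWS.
body = build_claude_request("List three uses of generative AI in retail.")
print(body)
```

Swapping to a different provider's model on Bedrock mostly means changing the model ID and the shape of the request body, which is the appeal of a single managed gateway.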

Amazon SageMaker: Amazon SageMaker is a fully managed service that enables developers and data scientists to build, train, and deploy machine learning models at scale. SageMaker HyperPod is a more recent announcement, designed to reduce training costs and training times for deep learning models by automatically selecting the optimal infrastructure and distributing training across multiple instances.

Trainium2: Amazon’s latest training chip, which offers faster training performance and more memory capacity. Alongside it, Amazon EC2 Capacity Blocks for ML allow customers to reserve GPUs for future use.

App Layer:

Amazon Q: Amazon's counterpart to ChatGPT that is seamlessly integrated into AWS to provide secure access to enterprise data stored on the platform. Noteworthy about Q is its incorporation of early elements of AWS's "next-generation developer experience," assisting users in setting up infrastructure, writing and deploying code, and managing governance.

Google:

While Google has been building AI products for over a decade, and was heralded for its forward-looking vision in acquiring DeepMind in 2014, it was caught flat-footed by the arrival of ChatGPT and the current AI wave. In the past few months, it has attempted to regroup and release highly capable closed-source and open-source models to keep up with OpenAI, Microsoft, Meta, and others. It remains to be seen if Google’s late attempt to reassert itself as a key player in AI will be enough to retain top talent and fend off both incumbent and startup competitors (e.g. Perplexity).

Model Layer:

Gemini: Publicly launched in December 2023, Gemini represents Google’s “most capable and general model yet”, a multi-modal model that is essentially Google’s answer to OpenAI. Offered in three versions (Ultra, the most capable model; Pro, for scaling a wide range of tasks; and Nano, the smallest model for on-device tasks), Gemini represents the next evolution of Bard and performs strongly on a number of dimensions. However, Gemini came under fire just weeks after launch due to inaccurate portrayals of historical figures, leading Google to issue a public apology and pause the generation of images of people.

Gemma: Coming on the heels of Gemini, Google launched Gemma in Feb 2024 as a family of lightweight, state-of-the-art open models built from the same research and technology used to create Gemini. Gemma’s weights were released in two sizes (2B and 7B), each with pre-trained and instruction-tuned variants.

Infrastructure Layer:

GCP AI Infrastructure: Through Google Cloud, customers can access a range of infrastructure tools to help deploy AI workloads, including GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) through services like Google Kubernetes Engine (GKE) or Google Compute Engine (GCE).

Vertex AI: Vertex AI offers a gateway to both Gemini and Gemma. The platform includes several tools, such as notebooks, evaluations, pipelines, MLOps, and training, that help developers put generative AI into production.

App Layer:

Duet AI: Google’s code assistance tool, powered by Gemini, helps software developers generate entire blocks of code or complete code as it’s being written. Duet can be accessed through IDEs like Visual Studio Code and JetBrains.

Speech-to-Text: STT converts audio into text transcriptions and integrates speech recognition into applications through APIs, powered by Chirp, Google Cloud’s foundation model for speech.

Translation AI: Cloud Translation API uses Google's neural machine translation technology to let customers dynamically translate text through the API using a Google pre-trained model, a custom model, or a translation-specialized LLM.

Other: A complete list of Google’s AI apps (largely delivered through GCP) can be found here.

Snowflake:

With Frank Slootman stepping down as CEO and Chairman and Sridhar Ramaswamy assuming the role of CEO, this marks a pivotal moment for Snowflake. Sridhar, formerly the Co-Founder of Neeva, an AI-powered search solution acquired by Snowflake, may give Snowflake a stronger “AI” brand. Snowflake is poised to leverage the GenAI wave, especially with its robust Data Cloud offering, but has not made strong moves at the model and application layers.

Model Layer:

None today.

Infrastructure Layer:

Snowflake Data Cloud: Customers can bring externally developed LLMs and fine-tune these models against high-quality enterprise data that already resides in Snowflake. Developers can leverage Snowflake services to build custom intelligent applications.

Snowflake Cortex: An intelligent, fully managed service that gives organizations access to industry-leading AI models, LLMs, and vector search functionality so they can quickly analyze data and build AI applications. To help enterprises quickly build LLM apps that understand their data, Cortex exposes a growing set of serverless functions for inference on generative LLMs such as Meta AI’s Llama 2, along with task-specific models to accelerate analytics.
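To give a feel for what a serverless LLM function looks like from the developer's side, here is a small Python sketch that prepares a call to Cortex's `SNOWFLAKE.CORTEX.COMPLETE` SQL function. The model name, the prompt, and the helper names are our own assumptions for illustration, and the `%s` placeholders assume a DB-API cursor using pyformat-style binding, as the Snowflake Python connector does by default.

```python
def cortex_complete_query(prompt, model="llama2-70b-chat"):
    """Return SQL and bind parameters for a Cortex COMPLETE call."""
    # Bind parameters keep the prompt out of the SQL string itself.
    sql = "SELECT SNOWFLAKE.CORTEX.COMPLETE(%s, %s) AS response"
    return sql, (model, prompt)


def run_completion(cursor, prompt):
    """Execute the query on an already-open cursor and return the text."""
    sql, params = cortex_complete_query(prompt)
    cursor.execute(sql, params)
    return cursor.fetchone()[0]


# Only the query is prepared here; no Snowflake connection is opened.
sql, params = cortex_complete_query("Summarize last week's support tickets.")
print(sql, params)
```

Because the model runs inside Snowflake, the prompt and any data referenced in the query stay within the platform, which is the core of the Cortex pitch.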

Snowflake Snowpark: Snowpark is the set of libraries and runtimes in Snowflake that securely deploy and process non-SQL code, including Python, Java, and Scala. Snowpark allows custom software development, more reliable engineering deployment, and better partner integrations.

Streamlit: Snowflake acquired Streamlit in 2022 to democratize access to data and the creation of data apps. Streamlit allows data scientists and less technical users alike to interact with data and build apps.

App Layer:

Not building at the application layer, though some could argue that several of Snowflake’s data visualization and analytics tools are closer to application offerings.

Databricks:

Founded in 2013 by the original creators of Apache Spark and MLflow, Databricks has the distinction of being the youngest member of the “Big 5” cloud players, though that hasn’t stopped it from making a giant splash in the AI community. From its $1.3B acquisition of MosaicML to its annual Data + AI Summits, Databricks continues to bill itself as the destination of choice for data scientists, analysts, and developers building in AI.

Model Layer:

None today.

Infrastructure Layer:

MosaicML: Databricks made perhaps the splashiest acquisition of the generative AI era when they bought MosaicML for $1.3B in July 2023. Through the acquisition, Databricks is now able to integrate a leading platform for creating and customizing generative AI models for the enterprise. MosaicML is used by hundreds of organizations and thousands of developers to pre-train and finetune large AI models at scale.

Lakehouse AI: Lakehouse AI offers a unique, data-centric approach to AI, with built-in capabilities for the entire AI lifecycle and underlying monitoring and governance. It includes several features intended to help customers more easily implement generative AI use cases: Vector Search; a curated collection of open source models; LLM-optimized Model Serving; MLflow 2.5 with LLM capabilities such as AI Gateway and Prompt Tools; and Lakehouse Monitoring.

App Layer:

Data Intelligence Platform: We are cheating a bit as this is more middleware than app, but Databricks’ Data Intelligence Platform combines generative AI with the unification benefits of a lakehouse to power a Data Intelligence Engine that understands the unique semantics of your data.

Conclusion:

The AI landscape among the major cloud providers is dynamic and rapidly evolving. While each of the Big 5 is strategically positioning itself across the model, infrastructure, and application layers, their differing approaches reflect the diverse strategies employed to harness the potential of AI in driving innovation and empowering developers. Tune in to our next edition, when we discuss the implications and learnings from how each of these providers is building its stack!
