Reflecting on Our 2023 Predictions

Looking back on a wild year for AI

Thanks to our now 1.6K+ subscribers! We are making a big push for new subscribers as we approach the end of the year, so please subscribe and share with friends, family, colleagues, or anyone you think would enjoy staying current on the latest trends in AI :)

It’s incredible to think we have just one month left in 2023. And what a year it’s been, especially in AI. While 2022 could be defined as the year AI entered the public consciousness in earnest, 2023 was the year Generative AI went mainstream.

Between the explosion in popularity of ChatGPT and other AI apps like MidJourney, the intense jockeying among the cloud giants to showcase their strength in AI, Nvidia’s soaring performance (with the stock up a cool 236% YTD), the billions of venture dollars poured into the space, and of course the “whiplash week” at OpenAI that nobody predicted, there was no shortage of innovation and drama in AI this year…which made writing a bi-weekly AI newsletter that much easier :)

Around this time last year, we published our “5 Predictions for Intelligent Apps in 2023”. After a truly spectacular year, we thought it was a great time to revisit those predictions and grade ourselves.

Without further ado, let’s get to it!

In December 2022, we made five predictions for what we expected to happen in 2023 (you can read the entire post here):

1) The Arrival of GPT-4

2) Generative Apps Go Mainstream

3) Costs of Training & Building Models Get Cheaper

4) Increased Regulatory Scrutiny & Established Regulations in AI Copyright

5) New Job Types are Created while Traditional Roles are Redefined

Let’s go through them one by one:

1) The Arrival of GPT-4

Did it happen? Yes.

To be fair, this wasn’t a terribly difficult prediction to make. Rumors were flying as early as fall 2022 that OpenAI would release the next version of its flagship large language model in the not-too-distant future, and we predicted GPT-4 would arrive “sometime in the first half of 2023”; it officially launched on March 14, 2023. GPT-4 is the most advanced model OpenAI has made public to date (we’re still waiting on Q*…). OpenAI has not disclosed its size, but it is rumored to be ~6x larger than its predecessor, with more than 1T parameters.

In our predictions, we felt GPT-4 would distinguish itself based on three major factors:

Availability of better and more recent training data → While GPT-4 was trained on a far larger corpus of data than GPT-3, its knowledge cut-off date, interestingly, advanced only a few months, from September 2021 to January 2022.

Optimality → No doubt about this one. GPT-4 outperformed ChatGPT by a wide margin, for example scoring in the 90th percentile on the Uniform Bar Exam and the 99th percentile on the Biology Olympiad (vs. the 10th and 31st percentiles, respectively, for ChatGPT).

Reduced biases → While GPT-4 certainly made improvements here, it is still prone to bias. Separately, its hallucination rate is relatively low compared with other models, though it does still hallucinate.

Per AI Business

2) Generative Apps Go Mainstream

Did it happen? Kind of.

Last year we noted that while the second half of 2022 saw an explosion in GenAI applications like Jasper and ChatGPT, those apps were still mostly confined to a relatively small group of early adopters and technologists dipping their toes in the water. We predicted 2023 would be the year generative apps truly went mainstream with the general public.

This is probably half-true. ChatGPT did the heavy lifting in bringing AI to the forefront of public consciousness, and suddenly everyone and their grandmother was using it (or at least talking about it). Beyond ChatGPT, however, it doesn’t feel like there are yet “killer use cases” that the general public relies on day to day.

As we noted in our post “The End of the Beginning”, usage of former AI darlings like Jasper had begun to drop off by the summer as competition heated up and the novelty began to wear off (which is standard in any technology hype cycle). At the other end of the spectrum, stalwarts like MidJourney and Copilot remain incredibly popular, but mostly within the tech-forward communities they target (digital creatives in the case of MidJourney, and software developers for Copilot).

To be clear, it is incredible that AI applications have gone from an effective cold start to millions of users in less than two years. But what we’re really excited about is the next leap: reaching billions of users.

3) Costs of Training & Building Models Get Cheaper

Did it happen? Yes.

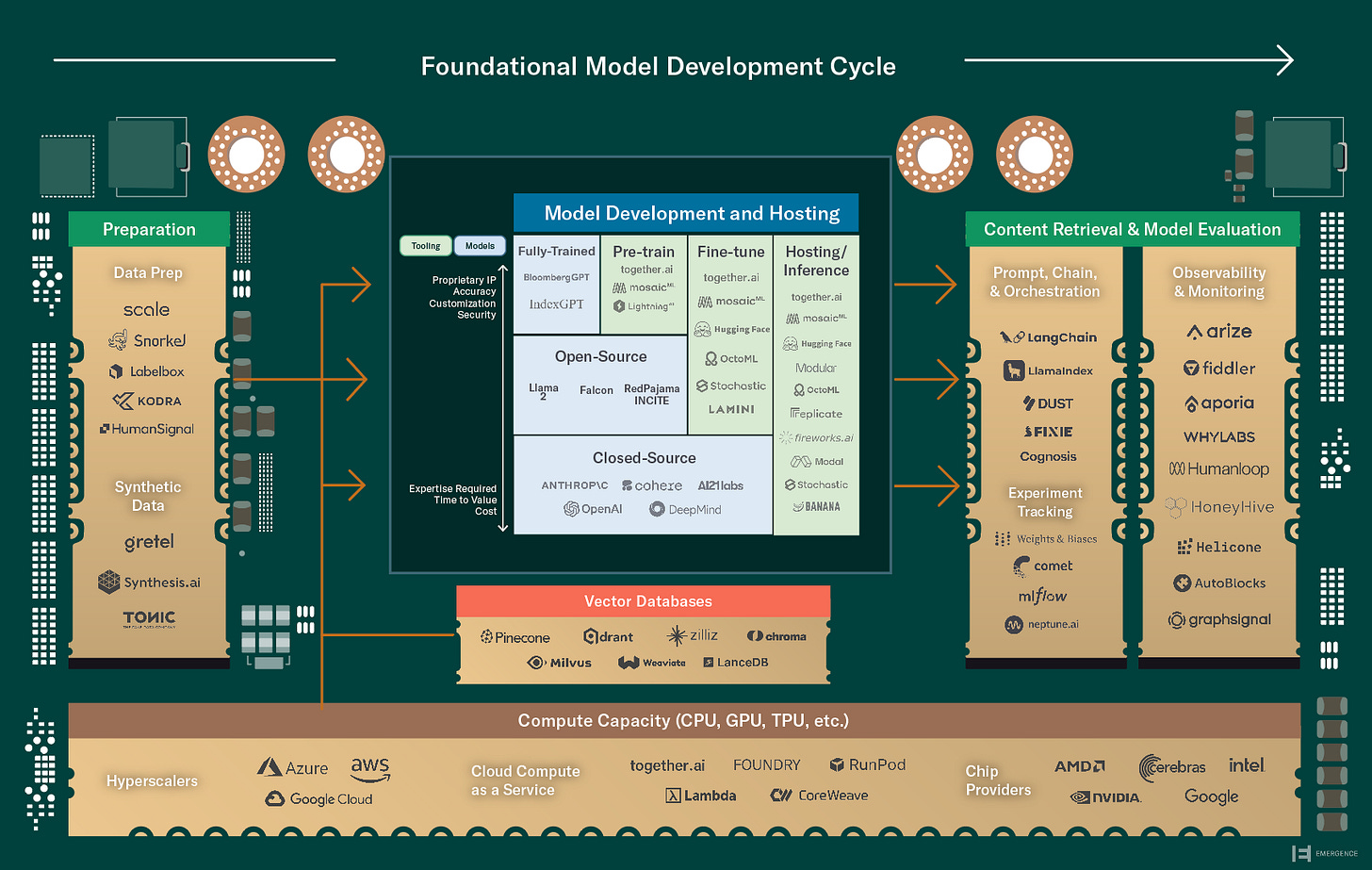

In 2022, a single GPT-3 training run was reportedly estimated to cost upwards of $12M. Our sense at the time was that three avenues would lower these costs: 1) model hosting costs would meaningfully decline; 2) models would become more dispersed beyond the hyperscalers and BigTech; and 3) a new category of ML infrastructure optimization would emerge. It certainly feels like there was progress on all three fronts in 2023.

Model hosting → We saw a proliferation of specialized third-party providers purpose-built to offer cloud GPUs for hosting and running models, including fast-growing startups like CoreWeave, Lambda Labs, Runpod, Radium, and others. While costs remain high, there are far more alternatives at both the silicon layer and the model hosting layer, and we believe costs will continue to fall.

Dispersion → While OpenAI remains at the center of the AI universe, it is far from the only foundation model game in town. 2023 saw the growth of several other closed-source model providers, such as Anthropic and Inflection, as well as a boom in open-source models from Meta (Llama), Adept, Mistral, and many others [see our post on closed vs. open-source models here].

Infra Optimization → 2023 was the year model infrastructure tools rose to prominence, perhaps best evidenced by Databricks’ acquisition of MosaicML for $1.3B in July. The deal highlighted the need for “best-in-class experience(s) for training, customizing, and deploying generative AI applications”, spurring both customer and investor interest in a range of tools in this space, including OctoML, Modal, Fireworks, Together.ai, and many others.

4) Increased Regulatory Scrutiny & Established Regulations in AI Copyright

Did it happen? Kind of.

Throughout the year there was plenty of discussion surrounding copyright infringement. Last year, class action lawsuits were already under way, such as the GitHub Copilot class action, in which GitHub was accused of scraping licensed code to build its AI-powered Copilot. Since then, we have seen increased regulatory scrutiny, along with pressure from enterprises to ensure that the outputs generated by the LLMs and AI apps they use will not land them in IP-related lawsuits. As a result, several large AI companies now offer copyright indemnification for their services. For example:

Adobe: Announced in June 2023 that it would offer copyright indemnification for Firefly AI-generated images. Adobe says Firefly images are designed to be “safe for commercial use” and is therefore offering IP indemnification for legal issues arising from their use.

OpenAI: Announced Copyright Shield, its latest program, under which OpenAI will defend customers facing copyright infringement claims related to its apps and services and pay the legal costs they incur.

That said, no specific AI copyright regulations were passed. The closest thing to established regulation was President Biden’s Executive Order (EO) on AI, issued on October 30, 2023. The EO’s stated goal is to promote the “safe, secure, and trustworthy development and use of artificial intelligence”. However, it is a lengthy document that is vague about its implications and about what regulation may follow, leaving a lot of room for interpretation for companies leveraging AI. EY discusses a few of the document’s key guiding principles here.

So, while little “official regulation” was passed, regulatory pressure and scrutiny did increase. We expect this to continue into the new year, with an evolving debate over how, specifically, to regulate AI.

5) New Job Types are Created while Traditional Roles are Redefined

Did it happen? No.

Towards the end of 2022, AI-forward companies like Scale AI were starting to hire “Staff Prompt Engineers”. We predicted that, just as the explosion of big data over the past decade led to the creation of several new positions (data engineer, data analyst, etc.), foundation models and intelligent applications would herald newly created positions like Prompt Engineer and Chief AI Officer.

However, these roles were not as widely adopted as we expected. We think one reason “prompt engineering” roles did not take off as predicted is that the ways of working with models have changed. A role dedicated solely to prompt engineering now feels a bit silly, since there are many techniques for prompting, fine-tuning, evaluating, and experimenting with models. Working across models and techniques has become part of broader workflows managed by ML engineers. We believe this will be somewhat akin to software engineers programming in different languages or working on front-end vs. back-end applications. So, while there may not be officially new “roles”, we could envision a world with “specialties” within each position.

While dedicated “prompt engineering” jobs did not take off, we have seen increased demand for AI/ML jobs more broadly. A few roles we have seen pop up more regularly include ML/AI Research Engineer, ML/AI Research Scientist, and Chief Scientist Officer.

Conclusion

Overall, we’d give ourselves a 3/5 for our 2023 predictions. While some proved accurate, such as the arrival of GPT-4 and the reduction in training costs plus model dispersion, the widespread emergence of new AI-specific job roles did not materialize as envisaged (at least not yet!). Generative apps did gain traction, especially thanks to ChatGPT's prominence, yet true mainstream adoption remains an ongoing journey. The discourse around AI copyright and regulatory pressure did intensify, but concrete, universally applicable regulations didn't solidify as expected.

As we reflect on the past year, we thought it was also worth highlighting key AI trends from 2023 that we were not expecting:

Open-source models proliferating → OSS models grew rapidly in popularity, traction, and performance. Read more in our previous article here.

New techniques for optimization → We saw a range of techniques for optimizing and customizing LLMs emerge or gain widespread adoption, such as fine-tuning, RLHF, RAG, LoRA, vector embeddings, and more.

Big ticket M&A → We witnessed a series of substantial acquisitions throughout the year, with prominent names like MosaicML, Casetext, and Neeva making headlines for their notable purchase prices.

BigTech moving quickly → Unlike in prior technological waves, large incumbents moved quickly to integrate AI and innovated with the nimbleness of startups, from enterprise players like Microsoft and Databricks to consumer-focused businesses like Canva and Notion.

Tune in to our next post, where we share our 5 key predictions for 2024!

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback on the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying, but if you are building the next great intelligent application and want to chat, drop us a line!