Podcast Drop: A Conversation with May Habib

What the Founder & CEO of Writer has to say about founding a GenAI company prior to the ChatGPT craze, building an enterprise product with 200% NDR, and much more

Please subscribe, and share the love with your friends and colleagues who want to stay up to date on the latest in artificial intelligence and generative apps!🙏🤖

Last week we had the chance to sit down with May Habib, founder and CEO of Writer. Writer is a full-stack generative AI platform, where they’ve built a proprietary family of models called “Palmyra”, purpose-built for the enterprise. Customers as varied as L’Oreal, Intuit, Cisco, Accenture, and Johnson & Johnson all use Writer to embed generative AI into their business processes in a secure, safe, and compliant way. Since its founding in 2021, Writer has become one of the fastest-growing generative AI companies and has attracted $126M in funding from leading investors like ICONIQ and Insight Partners.

May is a second-time founder, and we had an opportunity to talk to her about everything from:

Her journey from working as a Wall Street analyst at Lehman Brothers to founding Qordoba and Writer

Why she believes a graph-based approach to knowledge retrieval is superior to traditional vector-based RAG

How to build an enterprise-grade AI product with 200% (!) net dollar retention

And much more. You can watch or listen to the podcast below and read the full transcript here, but in this post we’ve summarized our key learnings and takeaways.

What does Writer do?

Writer is a full-stack generative AI platform focused on serving enterprise customers. Writer helps customers create, analyze, and govern content to achieve greater business impact. Companies use Writer for a variety of use cases, including generating copy and documents enhanced by enterprise-specific data, building custom internal apps for support, writing product descriptions, analyzing medical records, and much more. Unlike many companies that leverage foundation models out of the box, Writer is truly a full-stack generative AI company.

Writer is built on a proprietary tech stack that includes three key components:

Palmyra: This is Writer’s proprietary family of models, which aims to be transparent and auditable, faster and more cost-effective, and to achieve top performance on key benchmarks.

Knowledge Graph: Integrates with company data to deliver high-quality outputs and insights based on a company’s internal data sources. Allows companies to connect their proprietary datasets to create more company-specific content.

AI Guardrails: Ensures work is compliant, accurate, inclusive, and on brand. These guardrails help users stay within company guidelines.

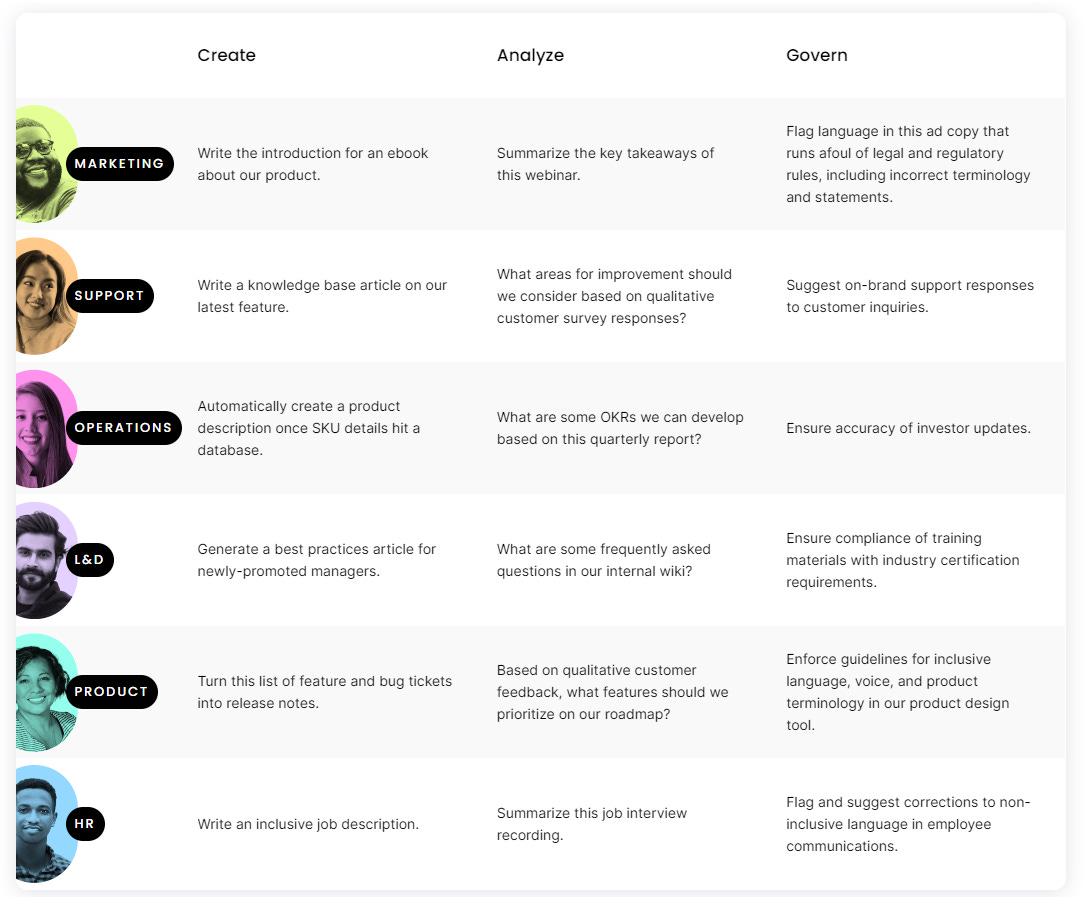

Writer operates across various industries, including financial services, healthcare, life sciences, e-commerce, retail, and technology. It offers solutions spanning different departments within organizations, including marketing, support, operations, R&D, product, and HR teams, to deliver impactful outcomes.

Notable use cases include:

Create: Writer facilitates content creation across diverse formats. This includes crafting blog posts, composing outbound emails, developing product or job descriptions, among other content types. Additionally, Writer aids in building customized applications utilizing composable UI elements that align with the company's voice and brand identity (e.g., building an insurance claim form).

Analyze: Writer helps companies analyze and summarize content that they already have (e.g., creating an app that helps marketers repurpose a webinar into a short-form video or summarize customer product feedback).

Govern: Writer ensures that all content outputs adhere to appropriate standards for the business. This involves flagging advertising copy that doesn't comply with legal regulations, providing on-brand responses for customer support tickets, and implementing other governance measures to maintain consistency and compliance.

May shared several key learnings on how Writer is transforming Generative AI for the enterprise:

On being able to control the end-user experience through a vertical approach:

It's really hard to control an end user experience in generative AI if you don't have control of the model. And given that we had chosen the enterprise lane, uptime matters, inference matters, cost of scale matters, and accuracy really matters. We all really deemed those things early on pretty hard to do if we're going to be dependent on other folks' models. With the multimodal ingestion to text and insight production, we made a strategic call almost a couple of years ago that we're going to continue to invest in remaining state-of-the-art. Today, our models are from the Palmyra-X general model, to our financial services model, to our medical model, and our GPT-4 zero-shot equivalent.

We've had to own every layer of the stack. We built our own large language models. They're not fine-tuned open source, and we've built them from scratch. They're GPT-4 quality, so you've got to be state-of-the-art to be competitive. But we've hooked up those LLMs to the tooling that companies need to be able to ship production-ready stuff quickly.

On avoiding the fate of being labeled a “GenAI wrapper”:

Around ChatGPT time, I think there was a fundamental realization among our team, and we wrote a memo about it to everybody and sent it to our investors, that real high-quality consumer-grade multimodal was going to be free. It was going to go scorched earth. That was clear, and it has come to pass. The other truths that we thought would manifest, that we wrote about 15 months ago: every system of record coming up with adjacencies for AI, and that the personal productivity market would be eaten up by Microsoft. And so for us, what that really meant was, how do we build a moat that lasts years while we deepen and expand the capabilities of the platform? And so what was already happening in the product around multifunctional usage right after somebody had come on, we basically were able to use that to really position horizontal from the get-go.

We've got this concept of headless AI where the apps that you build can be in any cloud. The LLM can be anywhere. The whole thing can be in single tenant or customer-managed clouds, which has taken 18 months to come together. We will double down on enterprise, security, and state-of-the-art models. But the difference is that in a world of hyperscalers and scorched earth, all the great things OpenAI is doing are super innovative, and every other startup is trying to get a piece. The bar for differentiation went way up 15 months ago for everybody.

On why traditional vector-based RAG doesn’t always work when context matters:

If embeddings plus vector DB were the right approach for dynamic, messy, really scaled unstructured data in the enterprise, we'd be doing that, but it didn't, at scale, lead to outcomes that our customers thought were any good. If you're building a digital assistant for nurses who are accessing both a decade-long medical history against policies for a specific patient, against best practice, against government regulation on treatment, against what the pharmaceutical is saying about the list of drugs that they're on, you just don't get the right answers when you are trying to chunk passages and pass them through a prompt into a model.

When you're able to take a graph-based approach, you get so much more detail. Folks associate words like ontologies with old-school approaches to knowledge management, but especially in the industries that we focus on, in regulated markets and healthcare and financial services, those have really served those organizations well in the age of generative AI because they've been huge sources of data so that we can parse through their content much more efficiently and help folks get good answers. When people don't have knowledge graphs built already, we've trained a separate LLM. It's seen billions of tokens. So this is a skilled LLM that does this, that actually builds up those relationships for them.
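To make the contrast concrete, here is a toy sketch of what "following relationships" can look like, as opposed to retrieving isolated text chunks. This is only an illustration under our own assumptions, not Writer's implementation: the triples, the node names (`patient_42`, `drug_a`, `policy_7`), and the helpers `build_graph` and `related_facts` are all hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical toy facts stored as (subject, relation, object) triples.
TRIPLES = [
    ("patient_42", "takes", "drug_a"),
    ("patient_42", "diagnosed_with", "condition_x"),
    ("drug_a", "contraindicated_with", "drug_b"),
    ("condition_x", "governed_by", "policy_7"),
    ("patient_99", "takes", "drug_b"),
]


def build_graph(triples):
    """Index triples by subject so outgoing relationships are easy to follow."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def related_facts(graph, start, max_hops=2):
    """Breadth-first walk that collects every fact within max_hops of start."""
    facts = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, neighbor in graph.get(node, []):
            facts.append((node, relation, neighbor))
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return facts


graph = build_graph(TRIPLES)
facts = related_facts(graph, "patient_42")
for fact in facts:
    print(fact)
```

In this sketch, a question about `patient_42` pulls in the drug interaction and the governing policy because they are linked to that patient, while facts about the unrelated `patient_99` are left out. A chunk-based retriever would instead depend on those passages happening to score as textually similar to the query.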

On the worst advice she’s received as a founder:

The worst advice that I have taken was early in Qordoba days, hiring VPs before we were ready. It felt like a constant state of rebuilding some function or other on the executive team. That's such a drain. We have an amazing executive team, we've got strengths, we've got weaknesses. We're going to learn together. This is the team. And it's why we spend so long now to fill every gap. We've got a head of international, CFO, CMO. We're going to take our time and find the right fit. But those were hard-won lessons. The advice that we got recently that we didn't take was to not build our own models. And I'm really glad we didn't take that advice.

You can follow May on LinkedIn or X, and check out Writer’s “Humans of AI” podcast here!

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback around the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying, but if you are building the next great intelligent application and want to chat, drop us a line!