Moats in the Era of AI

As Generative Apps continue to multiply, how do they differentiate?

Economic moats refer to the long-term competitive advantage that a company holds that protects its position in the marketplace. Businesses can have all sorts of different moats: superior intellectual property (Apple), network effects (Facebook), data advantages (Google), wide distribution (Microsoft), brand (Hermes), etc. The important point is that you need a moat (or more likely a combination of moats) to survive in a competitive market.

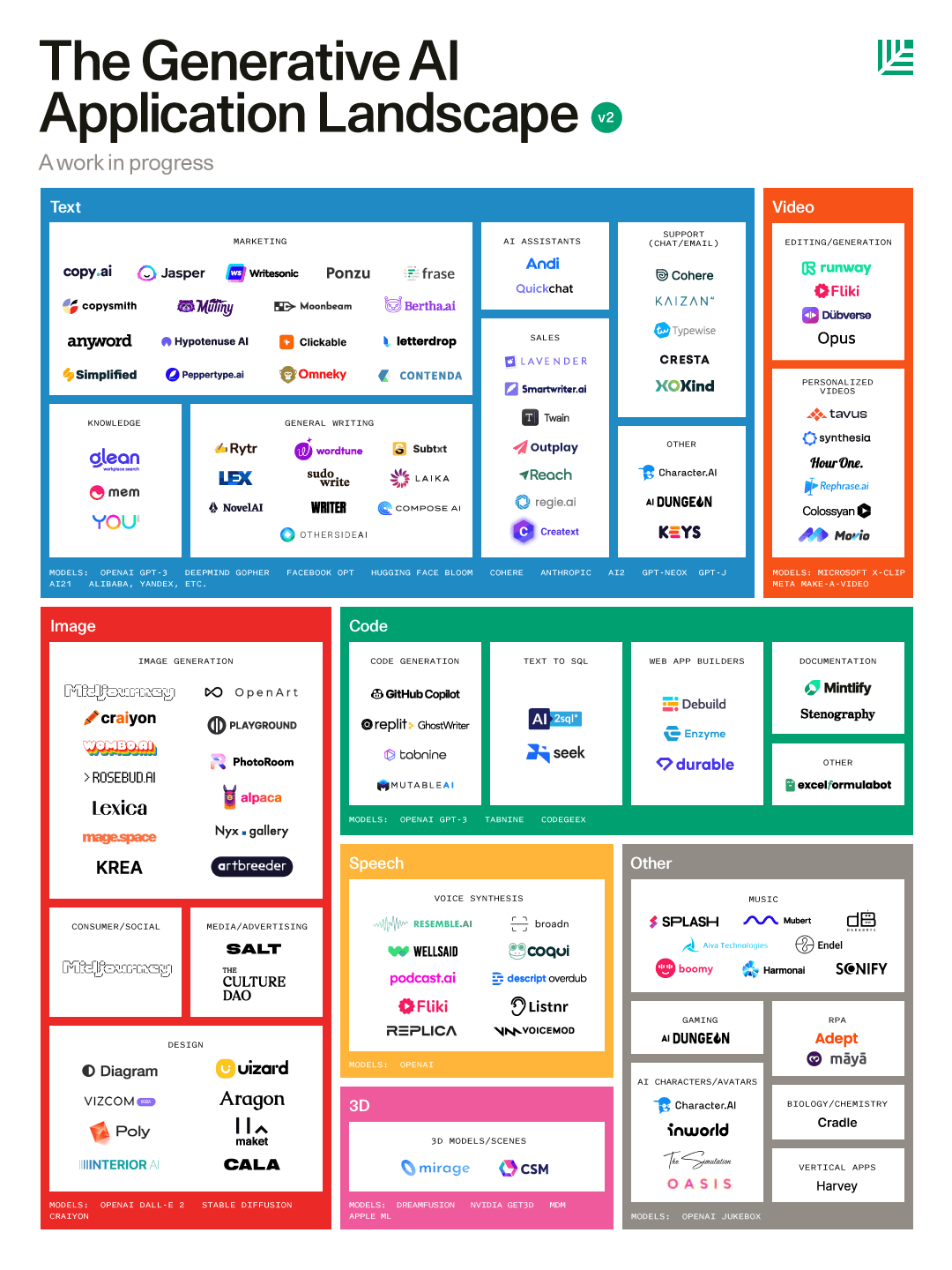

So how should we think about competitive moats for intelligent apps? It seems like a new “AI” company springs up nearly every day. Just look at the open-source spreadsheet NFX has assembled mapping the various startups building in generative AI; launched only in December, the list already includes 500+ companies (a quarter of which are building text-based products). Clearly this is the party everyone wants to join.

But as with any other competitive market in the history of commerce, the companies that survive (and thrive) are the ones that can build deep and durable moats, whether at the foundation model layer, in infrastructure and tooling, or in end-user applications.

Let’s dive in and look at five key moats and how they apply to various levels of the intelligent apps stack.

Which Moats Matter?

Let’s look at five standard business moats and how they can be applied to intelligent apps:

1. Intellectual Property & Technology

Companies can build moats around intellectual property (IP) through novel technological innovations protected by patents, copyrights, and trademarks. We refer to IP moats in the broader sense of building a product that has new and useful tech at its core, and simultaneously is difficult (if not impossible) for competitors to replicate or introduce into their own products. IP moats can be incredibly resilient and rewarding over the long term; Apple spends billions of dollars on R&D each year to build and create new products, and also maintains one of the strongest IP policies in the tech industry to protect the fruits of that R&D.

In the world of intelligent apps, having great technology clearly matters, but the strength of a company’s core tech IP can vary quite a bit. Foundation models (FMs) are often built on years of academic research, and companies producing FMs need to attract top engineering talent across AI and deep learning. For example, OpenAI’s LinkedIn headcount shows that nearly half of its ~450 employees are in engineering or related functions. Compare this with an end-user application like Jasper, where Sales, Marketing, and Business Development roles roughly equal (and may soon surpass) the number of engineers; one inference to draw here is that because Jasper was originally built solely on GPT-3, it required less technical product depth to rocket out of the gates.

Through another lens, Anthropic is building a “harmless” AI assistant using a novel method it developed called “Constitutional AI”, which reduces the need for humans in the reinforcement learning process; producing a foundation model with a novel training approach is a clear indication of a technological moat.

This is not to say that end-user apps won’t, or shouldn’t, worry about IP moats. Rather, IP moats will likely be more of a distinguishing characteristic at the foundation and infrastructure levels…today.

Advantaged: Teams that can access top-tier AI and ML talent, and products built on defensible intellectual property through novel technological innovations

Disadvantaged: Copycat apps leveraging only third-party foundation models and infrastructure, and trying to distinguish solely through the user experience

2. Network Effects (Data > User)

User network effects refer to the phenomenon in which a product or service gets better with each additional user that joins. The canonical example is a social media platform like Facebook or TikTok: nobody cares about being part of a network that only a few other people are on (there’s less content to interact with), but the value of being on the network increases with each new person that joins. (Of course, that could be debated today given the various issues many of these social platforms face as they hit critical mass.)

User network effects don’t appear to be as meaningful in the world of intelligent applications. ChatGPT isn’t necessarily better after the 10th or 1 millionth person interacts with it if they are all asking the same questions. The resulting data network effects, however, are clearly meaningful. Every additional data point entered into ChatGPT, along with the way the user reacts to ChatGPT’s responses, serves to make the entire machinery smarter. The same can be said of any application powered by a machine learning model; generally speaking, the more (and higher quality) data that can be fed into the models, the more personalized and customized the product can be for its users.

Advantaged: FMs trained on vast quantities of high-quality data, and end-user applications finetuned using proprietary first-party user data

Disadvantaged: Apps solely built on other foundation models without a proprietary dataset, and tools whose ML models don’t get better with more data (we tend to agree with Yuri at Wayfinder Ventures below)

3. Distribution

Having a great product alone doesn’t necessarily translate to building a successful business. Companies need to be able to deliver that product to their customers in a meaningful way. This is what distribution refers to: the ability of companies to reach their customers effectively and efficiently. Distribution advantages can be incredibly powerful. In Zero to One, Peter Thiel goes as far as to say that “Superior sales and distribution by itself can create a monopoly, even with no product differentiation. The converse is not true.”

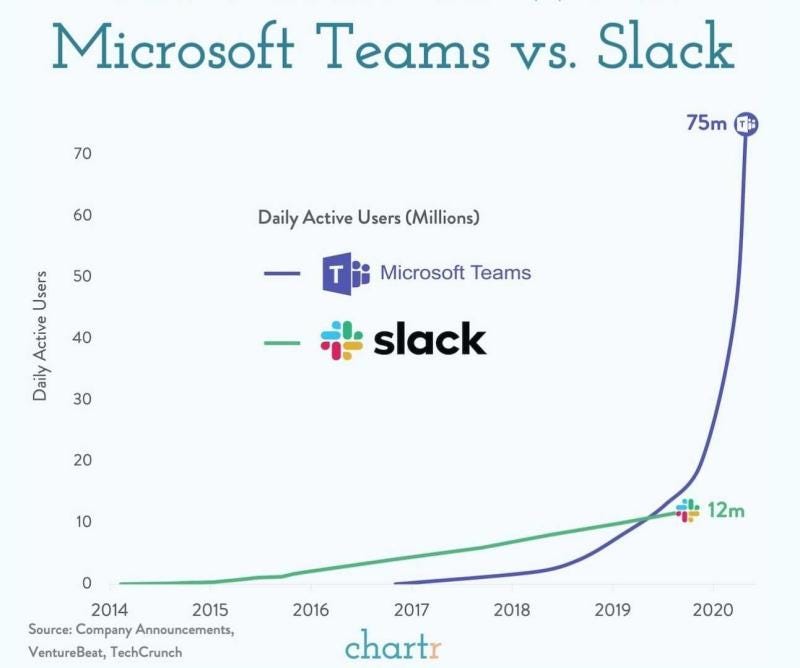

Distribution moats tend to advantage incumbent products that have already built out wide and deep networks. Take for example Microsoft Teams vs. Slack; while Teams launched several years after Slack (and many consider it an inferior product), Microsoft leveraged its massive user base to quickly roll out Teams as the default collaboration app on Windows. You can see how stark a difference great distribution can make…

We are seeing something similar play out now with AI. Apps that have already accumulated millions of users are finding ways to incorporate AI into those apps and workflows. For example, in November the collaboration app Notion introduced “Notion AI”, a writing assistant to help “write, brainstorm, edit, summarize, and more”, to its existing 30M+ user base. Microsoft, the king of distribution, is a close partner of (and major investor in) OpenAI, and recently announced the general availability of Azure OpenAI Service, giving the many millions of Microsoft users worldwide access to advanced AI models, ChatGPT, and other key OpenAI offerings. As intelligent and generative apps continue to proliferate, all players need to think about how to build distribution advantages (or partner with those that have them) in order to thrive.

Advantaged: Incumbents with existing large, sticky user bases, and companies with novel AI product depth that can partner with (or sell into) those incumbents

Disadvantaged: Startups whose products have low switching costs and compete directly with

4. User Experience

We refer to UX as the style in which end users interact with a company’s products and services. Good UX should consider how easy it is for the end user to accomplish their desired tasks in the most efficient and pleasant way possible. Apple is famous for being one of the most innovative companies around UX – they created an extremely sleek interface that has driven customer adoption and long-term stickiness.

Having a well-thought-out UX is a critical component of creating long-term customer loyalty and a sustainable moat in the evolving world of Intelligent Applications. Even for “simple” text-based generative apps, the end user is still tasked with i) prompting the model, ii) controlling the workflow, and iii) selecting the correct outputs. While this may seem like a simple task, the user doesn’t always know how best to interact with the model without some guidance (imagine getting a paint brush and blank canvas: where would you start?). It is also worth noting that FMs don’t have 100% accuracy, so it’s important that the end user understands how to interact with the model (prompt engineering) and work around its errors to eventually obtain the correct output.

Generative AI companies that have a strong UX can build an enduring moat and drive customer stickiness. Below are a few key questions to keep in mind when thinking through UX:

How do you create a delightful experience that requires little cognitive effort from the end user? How do you allow the user to have some level of creative control, but not so much that they have no idea what to prompt the model to do?

How do you avoid showing biased, incorrect, or irrelevant suggestions that might tell the end user that this model has completely gone crazy?

How do you rank the different suggestions based on the end user’s preferences while not confusing them as to why there are multiple outputs?

Advantaged: Companies with strong product functions, a commitment to thoughtful design, and apps that are in sync with the user’s workflows

Disadvantaged: Products over-indexed to the technical capabilities of the model, foundation models and infrastructure tools geared towards technical users

5. Reinforcement Learning from Human Feedback (RLHF)

Generative AI companies need to produce relevant outputs that help the end-user solve problems, but off-the-shelf FMs don’t always produce the best or most accurate outputs. RLHF is a process in which companies can incorporate human feedback to solve deep reinforcement learning tasks. By involving human feedback, models can produce more relevant and “correct” outputs.

When thinking of RLHF, there are two main components. The first is generation (getting the model to generate 100s or 1000s of responses to a given prompt). The second is relevance (determining which of these generated responses is most relevant to the problem you are trying to solve). To test which of the responses are most relevant, companies have humans evaluate the answers. Human annotators (e.g., workers on Mechanical Turk) may generate a “gold standard” of what the desired model behavior/output should look like and then rank the different outputs against it. From there, companies can leverage the data to fine-tune the model and produce more desirable outputs.
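To make the ranking step more concrete, here is a minimal sketch of how human rankings could be turned into training signal. It assumes one common setup that the discussion above does not spell out: each ranking is expanded into (preferred, rejected) pairs, and a scoring model is penalized whenever it scores the rejected response higher. The function names, the sample responses, and the scores are all hypothetical and purely illustrative.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Expand one human ranking (best first) into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the first item of each pair
    # is always the one the annotator ranked higher.
    return list(combinations(ranked_responses, 2))


def pairwise_loss(score_preferred, score_rejected):
    """Pairwise preference loss (assumed form): -log(sigmoid(score difference)).

    The loss is small when the preferred response gets the higher score
    and grows when the scoring model disagrees with the human ranking.
    """
    diff = score_preferred - score_rejected
    return -math.log(1.0 / (1.0 + math.exp(-diff)))


# Hypothetical ranking from one annotator, best response first.
ranked = ["response_b", "response_a", "response_c"]
pairs = ranking_to_pairs(ranked)

# Hypothetical scores from a scoring model for the same responses.
scores = {"response_a": 0.2, "response_b": 1.5, "response_c": -0.7}
total_loss = sum(pairwise_loss(scores[good], scores[bad]) for good, bad in pairs)
```

In a setup like this, the volume and quality of human rankings determine how well the scoring model can be trained, which is why access to annotators matters for this moat.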

In order to create a sustainable moat in Intelligent Apps, companies will need to leverage RLHF so the app creates the best output for the end customer with minimal effort. With ChatGPT, OpenAI took prompts from their customers, collected many responses, and had human judges grade those responses based on which they thought was the most relevant answer. From there, they built a reinforcement learning algorithm to make ChatGPT do more of what the end user wanted without specific prompting. By doing so, ChatGPT generated much more helpful outputs in response to user instructions, and has been able to create a more sustainable moat. Iterating on the relevance model for generative apps can be a way to build an enduring technical moat.

Advantaged: OpenAI and other enterprises that can afford human annotators or Mechanical Turk workers to create more evaluation datasets and further improve the model

Disadvantaged: Smaller startups that are less technical or who may not have as many resources to constantly create new evaluation datasets

So What Happens?

What do we think matters the most at each layer of the stack?

End User Applications → Distribution, UX/UI, RLHF, and access to proprietary data

Tooling and Infrastructure → Data network effects and bottom-up adoption from the developer community

Foundation Models → IP / Tech, model accuracy, and better/faster/cheaper model performance

At the end of the day, we believe there can (and will) be multiple winners at each layer of the stack. The trait these winners will share is the ability to build deep and durable moats in this new era of AI.

Another excellent article to read on this topic is “The Missing Moat in Generative AI” by Connor Phillips.

Funding News

Below we highlight select private funding announcements across the Intelligent Applications sector. These deals include private Intelligent Application companies who have raised in the last three weeks, are HQ’d in the U.S. or Canada, and have raised a Seed - Series E round.

New Deal Announcements - 01/06/2023 - 01/19/2023:

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback around the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying but if you are building the next great intelligent application and want to chat, drop us a line!
