Is This The Year Apple Awakens in AI?

With $160B+ of cash and an unrivaled R&D budget, what should we expect from Apple in the AI world?


At the end of 2023, we shared five of our key predictions for 2024. One that seemed to resonate was that this would be the year that Apple “awakens” in AI. Despite a long history of releasing category-defining products (remember when the iPhone came out?), Apple had been far quieter than its Big Tech peers in the generative AI domain.

We believe that will change in 2024.

With ~$160B of cash on its balance sheet (more than the entire GDP of Morocco), and an R&D budget that exceeds PayPal’s total annual revenue, few companies are better poised to lean into a technological sea change like AI.

Lest we forget just how good Apple is at launching new devices, they reportedly sold out of Vision Pros less than three days after they became available for pre-order. Initial estimates peg sales at 160,000-180,000 units, despite a hefty $3,499 price tag ($4K+ if you include the extras) which many industry observers scoffed at. If the Vision Pro teaches us anything, it’s that you can never count Apple out - even in AI.

Let’s dig into what Apple could be cooking up this year…

Apple runs AI directly on its hardware instead of in the cloud.

On December 12, 2023, Apple released a research paper entitled “LLM in a Flash”. The paper explores how to make LLM inference efficient enough to run on battery-powered devices (such as iPhones). While the common approach to making LLMs more accessible has been to shrink the model, this paper presents a method for running a model that does not fit in the device’s available memory.

The paper highlights how:

The standard approach is to load the entire model into DRAM; however, this limits the maximum model size that can be run. For example, a 7 billion parameter model requires over 14GB of memory just to load the parameters in half-precision floating point format, exceeding the capabilities of most edge devices.

To address this limitation, we propose to store the model parameters in flash memory, which is at least an order of magnitude larger than DRAM. Then, during inference, we directly load the required subset of parameters from the flash memory, avoiding the need to fit the entire model in DRAM.
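To make the numbers in the excerpt concrete, here is a minimal Python sketch. It is not Apple's implementation, and the paper's actual method is considerably more involved. The first function reproduces the memory arithmetic (2 bytes per parameter in half precision). The other two illustrate only the basic idea of leaving weights on storage and reading the rows you need, using an ordinary file and a memory map as a stand-in for flash. All names here (`param_memory_gb`, `write_toy_weights`, `read_rows`) and the toy file layout are assumptions made for illustration.

```python
import mmap
import struct

BYTES_PER_PARAM_FP16 = 2  # half precision stores each parameter in 2 bytes
BYTES_PER_FLOAT32 = 4     # the toy file below uses float32 for simplicity


def param_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Memory needed just to hold the weights, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def write_toy_weights(path, rows, cols):
    """Write a rows x cols float32 matrix to disk; row r holds the value r in every column."""
    with open(path, "wb") as f:
        for r in range(rows):
            f.write(struct.pack(f"<{cols}f", *([float(r)] * cols)))


def read_rows(path, cols, wanted_rows):
    """Read only the requested rows through a memory map, leaving the rest on disk."""
    row_bytes = cols * BYTES_PER_FLOAT32
    loaded = {}
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        for r in wanted_rows:
            start = r * row_bytes
            # Slicing the map touches only the bytes of this row.
            loaded[r] = struct.unpack(f"<{cols}f", mm[start:start + row_bytes])
    return loaded
```

For example, `param_memory_gb(7_000_000_000)` returns `14.0`, in line with the roughly 14GB the paper cites for a 7 billion parameter model, and `read_rows(path, cols, [2, 5])` returns only rows 2 and 5 of a file written by `write_toy_weights`, without reading the whole matrix into memory.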

This is a major development, paving the way for efficient deployment of LLMs on smaller devices like iPhones. While LLMs have gained popularity, their widespread use depends on finding a natural and convenient distribution channel. What better channel than through the smartphone?

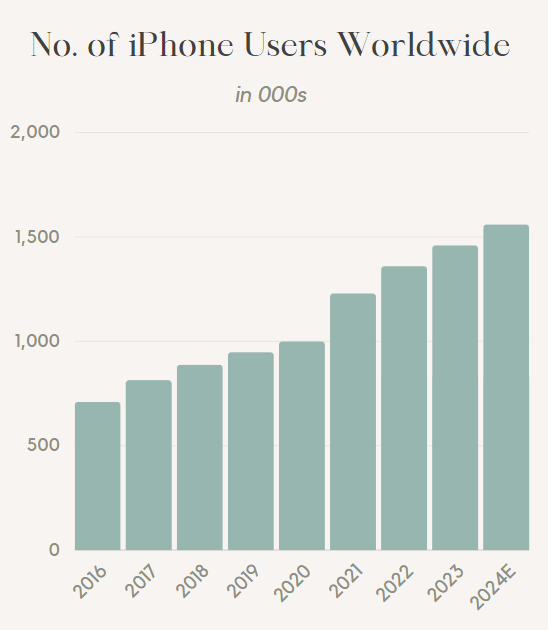

The iPhone, with its massive user base of over 1.4 billion worldwide, presents a prime opportunity for Apple to establish a new distribution and monetization channel. Enabling LLMs on iPhones could open up possibilities for Apple to generate revenue and explore new business models, with both models and associated apps being run on their iPhone devices.

Apple launches new multi-modal models.

In October 2023, Apple released an open-source multi-modal LLM called “Ferret” in collaboration with researchers from Columbia University. Ferret is proficient in analyzing and describing small image areas, thus allowing for more contextual responses and offering deeper insights into visual content.

At the highest level, here is how the Apple Ferret LLM works:

Visual Ingestion: Ferret doesn’t limit itself to understanding an image as a whole; it analyzes specific regions of an image and identifies the elements within them.

Contextual Responses: When asked to identify an object within an image, Ferret not only recognizes the object but leverages surrounding elements to provide deeper insights and context, going beyond object recognition.

We believe this image-based interaction may enable more sophisticated and contextual interactions where consumers can ask questions about images or visual content. For instance, Ferret could power advanced visual search capabilities across photos and videos, leading to personalized experiences in areas like food, travel, playlists, movies, or content.

Given the ease of capturing photos and videos on the iPhone, this advancement could be a significant breakthrough for Apple. Imagine taking a photo and receiving 10 personalized product recommendations based on it. Or, imagine having your Apple Vision Pro headset on and talking directly to a new version of Siri that can give real-time recommendations.

With Apple's wealth of consumer data and insights into app usage, the potential for a transformative, personalized AI assistant (Siri 2.0?) becomes even more compelling: an assistant that effortlessly connects across all segments of your life, offering a truly personalized experience based on the content you love to watch, read, or listen to. After all, Apple can seamlessly connect across all our apps.

Apple will compete head-on with “AI-Native” products across both Hardware and Software:

Hardware: We are starting to see more “AI Hardware” products emerge, such as the Rabbit R1, a “personalized operating system with a natural language interface”…essentially a lightweight pocket companion powered by AI. The Rabbit took CES by storm, and the $199 device quickly zoomed to $10M in pre-orders.

It’s terrific to see a renewed interest in hardware, especially at lower price points like Rabbit’s, but it’s hard to imagine that Apple won’t be throwing its weight around in AI-centric consumer hardware, particularly given its global supply chain and best-in-class supplier relationships.

Where do we think Apple could compete on hardware (beyond the iPhone)? The Vision Pro is a good example of Apple’s push into new categories like spatial video and audio. It’s possible that future versions of Vision Pro will run on Apple’s own AI chips, or allow users to leverage AI akin to the Ray-Ban Meta smart glasses. In other devices, will AI headphones still resonate when AirPods incorporate embedded models? What happens when multi-modality reaches the Apple Watch?

Software: Mark Gurman, an Apple reporter at Bloomberg, wrote that Apple is likely to announce several GenAI offerings during its Worldwide Developers Conference in June:

Apple is eyeing adding features like auto-summarizing and auto-complete to its core apps and productivity software such as Pages and Keynote. It also is working to merge AI into services like Apple Music, where the company wants to use the technology to better automate playlist creation. And Apple is planning a big overhaul to its digital assistant, Siri.

Of course, these aren’t perfect substitutes and there will always be room for third-party apps (how else will the App Store make money?), but it will be interesting to see how Apple enhances its existing apps with AI, or releases new apps that compete directly with AI startups.

Conclusion

With $160B of cash, 250K+ highly motivated and talented employees, 550+ retail stores, a world-class supply chain, and a 15+ year run of releasing chart-topping consumer products, few companies are better positioned than Apple to take advantage of the AI wave.

It’s not a question of if, but when.

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback around the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying but if you are building the next great intelligent application and want to chat, drop us a line!

It’s interesting that Apple is taking a different (and smarter) approach to deploying their LLM tech. Rather than shipping a substandard product and tarnishing their brand (like Google Bard), they quietly deployed Ferret in Q4 of last year and asked users on GitHub to play with it. Apple is definitely working behind the scenes. With iPhone purchases down year over year, especially in China, I bet we see them bring AI to the marketplace in a big way this year.
