Is Seeing Still Believing?

The rapid rise of deepfakes in the AI era, and what we can do about it

The Indian elections have just concluded. Beyond the monumental achievement of getting 600M+ people to the polls despite a historic heat wave, these elections may be remembered just as much for the sheer volume of deepfakes deployed during the campaign.

Per a recent NBC News article:

In what has been called India’s first “artificial intelligence election,” significant campaign funds were spent in hiring artists such as Nayagam, chief executive of the Chennai-based start-up Muonium AI, to deploy deepfakes promoting or discrediting candidates that spread like wildfire across social media.

These videos use artificial intelligence to generate believable but false depictions of real people saying or doing just about anything creators want, from a video of Modi dancing to a Bollywood song to a politician who died in 2018 being resurrected to endorse his friend’s run for office.

While deepfakes are not a new concept, advances in AI mean they can now be produced at unprecedented scale, at higher quality, and with a far greater chance of deceiving their audience. And deepfakes are not just a concern in a year of global elections; they are an ongoing and persistent threat spanning the corporate, entertainment, and consumer worlds.

Consider a recent criminal case in Maryland, where a high school athletic director used OpenAI tools and Bing Chat to create a fake recording of the school’s principal making racist and antisemitic comments.

The audio clip quickly spread on social media and had “profound repercussions,” the court documents stated, with the principal being placed on leave. The recording put the principal and his family at “significant risk,” while police officers provided security at his house, according to authorities.

The recording also triggered a wave of hate-filled messages on social media and an inundation of phone calls to the school, police said. Activities were disrupted for a time, and some staff felt unsafe.

With the advent of advanced AI, we are now also entering a “golden age” for deepfakes, where anyone from celebrities to the everyday person can have their likeness altered for malicious or deceitful purposes.

So what exactly are deepfakes, and why should we care?

Let’s dig in.

What Are Deepfakes?

The term “deepfake” originated with a video that surfaced in late 2017 showing actress Gal Gadot’s face superimposed onto an existing pornographic video. An anonymous Reddit user who called himself “deepfakes” claimed to have created it.

Today, deepfakes refer to any digital media (images, video, audio, and even text) that has been manipulated using AI to simulate or alter a specific individual or the representation of that individual. Deepfakes are a subset of “synthetic media,” which refers to any media created or altered through digital or artificial means (think of a song that has been digitally remixed). Deepfakes stand out for their deliberate aim of depicting an individual or group saying or doing something they never actually said or did.

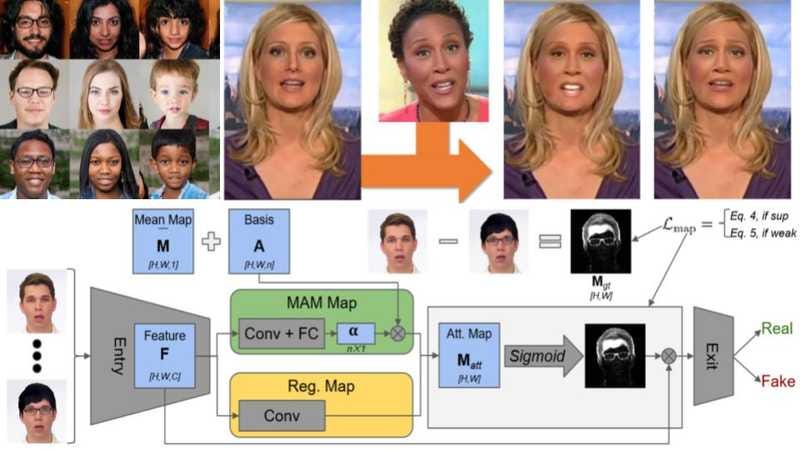

One of the key technological catalysts for deepfakes is the Generative Adversarial Network, or GAN. GANs are a powerful class of neural networks, typically trained on unlabeled data (unsupervised learning), that use adversarial training to produce artificial data that is difficult to distinguish from real data.

A GAN works by pitting two AI models against each other to make the output as authentic as possible. The two models, a generator and a discriminator, are trained in tandem. The generator creates content that mimics the examples in its training data. Meanwhile, the discriminator examines each sample it is shown and estimates the probability that it came from the real training data rather than from the generator. Both models keep improving until the discriminator can no longer tell the difference, judging a generated sample just as likely to be real as fake. The method is so effective because the generator is constantly tested against a discriminator whose sole job is to catch its fakes, and which is itself getting better at that job.
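To make the adversarial tug-of-war a little more concrete, here is a minimal Python sketch (our own illustration, not code from any deepfake tool) of the standard GAN value function, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to push up and the generator tries to push down. It also computes the best possible discriminator output at a point x, p_data(x) / (p_data(x) + p_gen(x)). The one-dimensional Gaussian "real" and "generated" distributions are assumptions chosen purely for illustration; real deepfake models work on images or audio with deep networks.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of a normal distribution N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


def optimal_discriminator(p_data, p_gen):
    """Best achievable discriminator output D*(x), given the real-data
    density and the generator density evaluated at the same point x."""
    return p_data / (p_data + p_gen)


def gan_value(d_real, d_fake):
    """Sample estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs on real samples (probabilities in (0, 1)).
    d_fake: discriminator outputs on generated samples.
    The discriminator wants this value high; the generator wants it low.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# Early in training (assumed setup): real data ~ N(0, 1), generator output ~ N(2, 1).
# At x = 0 the best discriminator is fairly confident the sample is real.
early = optimal_discriminator(normal_pdf(0.0, 0.0, 1.0), normal_pdf(0.0, 2.0, 1.0))
print(f"D*(0) early in training: {early:.3f}")

# At equilibrium the generator matches the data, so D* = 0.5 everywhere
# and the value function settles at -log 4.
balanced = optimal_discriminator(normal_pdf(0.0, 0.0, 1.0), normal_pdf(0.0, 0.0, 1.0))
print(f"D*(0) at equilibrium: {balanced:.3f}")
print(f"V at equilibrium: {gan_value([balanced] * 4, [balanced] * 4):.3f}")
```

When the generated distribution matches the real one, the best discriminator outputs 0.5 for every sample, no better than a coin flip, and the value function sits at -log 4 (about -1.386). That is the "just as likely to be real as fake" end state in which the generator has won.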

Deepfakes are emerging across a wide range of modalities, including:

Face swapping → To date, the most common form of deepfake, in which one person’s face is convincingly superimposed onto another’s. Consumer apps such as Snapchat integrate face-swapping technology, usually for entertainment, but more advanced AI techniques can be turned to malicious ends (or used to create entirely new faces).

Voice synthesis → Generating synthetic voice recordings that mimic someone’s speech patterns and intonation. These can be used to create fake audio messages or to make someone’s voice deliver lines the real speaker never said.

Gesture and body movement manipulation → Deep learning techniques can alter body movements, gestures, and expressions in videos, making it seem like a person is doing or saying something they didn’t (e.g. the Modi dance video viewed by millions).

Object manipulation → In this form of deepfakes, objects are manipulated within images or videos, changing their appearance or behavior. This could range from “fake” product placement (e.g. a Coke bottle appearing on the grounds of The Masters where it did not previously exist) to something more manipulative (imagine a weapon photoshopped into a crime scene ex post facto).

What Can We Expect Going Forward?

With advances in AI, we are seeing deepfakes flood every sphere of life.

Geopolitical

As mentioned above, the Indian elections proved to be a hotbed of deepfake activity. Bollywood stars like Aamir Khan and Ranbir Kapoor were falsely shown criticizing certain political figures. Videos of top candidates were overlaid with digital lip syncing to create the impression they were voicing opinions they did not hold (or, in some cases, the exact opposite of their actual message).

Interestingly, some candidates actually paid services to digitally alter themselves. Wired has a great story about candidates using a firm called Polymath Synthetic Media Solutions to create deepfakes of THEMSELVES in an effort to issue personalized messages to hundreds of thousands of different constituents.

The current US Presidential campaigns for the upcoming November elections are also riddled with deepfakes, particularly given the highly digital campaigns both sides are running. Prominent examples include AI-created images on X falsely depicting President Biden in military attire, a video altered to make Vice President Kamala Harris appear inebriated and incoherent, and even a PAC-supported ad that used AI to replicate Donald Trump’s criticism of Iowa Governor Kim Reynolds.

Corporate

Deepfakes are increasingly used for fraudulent purposes at the corporate level as well. In February this year, a finance worker at the engineering company Arup was tricked into paying $25M to fraudsters posing as the company’s CFO in a video conference call.

The elaborate scam saw the worker duped into attending a video call with what he thought were several other members of staff, but all of whom were in fact deepfake recreations, Hong Kong police said at a briefing on Friday.

Chan said the worker had grown suspicious after he received a message that was purportedly from the company’s UK-based chief financial officer. Initially, the worker suspected it was a phishing email, as it talked of the need for a secret transaction to be carried out.

However, the worker put aside his early doubts after the video call because other people in attendance had looked and sounded just like colleagues he recognized, Chan said.

Believing everyone else on the call was real, the worker agreed to remit a total of 200 million Hong Kong dollars, about $25.6 million, the police officer added.

What’s remarkable about this deepfake is that Arup likely has many enterprise security tools in place (e.g. network, endpoint, and email security), yet none of these existing checkpoints could protect against a human employee believing they needed to make a “verified” transaction.

Consumer

It’s not just political candidates, famous celebrities, or wealthy corporations that are targets for deepfakes, but increasingly the average consumer as well. According to a survey conducted by the identity verification company Jumio, 72% of consumers “worry on a day-to-day basis about being fooled by a deepfake into handing over sensitive information or money.” Only 15% say they’ve never encountered a deepfake video, audio clip, or image.

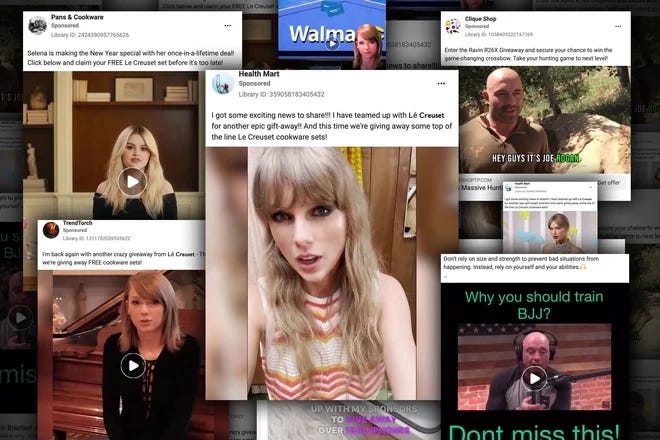

Deepfakes can be used to target consumers with everything from fraudulent product marketing (e.g. a deepfaked Taylor Swift peddling Le Creuset cookware) and investment hoaxes (e.g. a deepfake of Elon Musk promoting a fake crypto platform) to regular old-fashioned identity fraud.

The term “buyer beware” is taking on a new meaning in this AI era…

So What Are We Doing About It?

With advances in AI making digital manipulation cheaper and easier, there will need to be an equally strong effort to combat the spread of deepfakes. We’ve seen a number of new companies emerge in this category, such as Clarity, Reality Defender, IdentifAI, and PolyguardAI, each taking a slightly different angle on the deepfake security threat. These companies typically use AI themselves (fighting fire with fire, or AI with AI!) to distinguish real content from digitally manipulated content, for example by identifying inconsistencies in facial expressions or movements. Ultimately, these companies aim to detect deepfakes across all modalities, including voices, images, and videos.

Another terrific company in the deepfake detection space is TrueMedia.org. TrueMedia is a non-profit, non-partisan, and free tool founded by Dr. Oren Etzioni, who was the founding CEO of the Allen Institute for AI (and is a venture partner at Madrona!). With TrueMedia, anyone can submit a social media post containing a video, audio clip, or image and quickly get aggregated results from dozens of AI detectors indicating whether the content is real or deepfaked. Their outstanding work has been featured in The New York Times, PBS, CBS, and Fortune.
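This article does not describe how TrueMedia combines its detectors’ outputs, so the following is only a hypothetical Python sketch of how scores from several detectors might be rolled up into a single verdict. The detector names, the 0.5 threshold, and the choice of a plain average plus a "fraction of detectors that flagged it" are all our own assumptions for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class DetectorResult:
    name: str         # hypothetical detector identifier
    fake_prob: float  # detector's estimated probability (0 to 1) that the media is manipulated


def aggregate(results, threshold=0.5):
    """Combine several detector scores into one summary.

    Averages the scores and also reports what fraction of detectors
    individually flagged the media. Both rules are illustrative assumptions,
    not any real product's method.
    """
    if not results:
        raise ValueError("need at least one detector result")
    avg = mean(r.fake_prob for r in results)
    flagged = sum(1 for r in results if r.fake_prob >= threshold)
    return {
        "mean_score": avg,
        "flag_fraction": flagged / len(results),
        "verdict": "likely manipulated" if avg >= threshold else "no strong evidence of manipulation",
    }


# Hypothetical scores from three detectors looking at different cues.
results = [
    DetectorResult("face_consistency", 0.91),
    DetectorResult("voice_artifacts", 0.74),
    DetectorResult("compression_traces", 0.22),
]
summary = aggregate(results)
print(summary["verdict"], round(summary["mean_score"], 2), round(summary["flag_fraction"], 2))
```

Averaging means a single confident but mistaken detector can be outweighed by the others; a production system might instead weight each detector by how accurate it has proven to be on a given media type.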

Since 2019, several states and the federal government have attempted to pass legislation targeting the use of deepfakes. These laws do not apply exclusively to deepfakes created by AI; they apply more broadly to deceptively manipulated audio or visual media that falsely depicts others without their consent. We believe more regulation directly addressing deepfakes could be passed, particularly around online impersonation intended to intimidate, bully, threaten, or harass a person. Pressure on the issue has grown as AI has made deepfakes so much easier to create.

Deepfakes are by no means a new threat vector, but they have certainly become top of mind for organizations, companies, celebrities, and individuals alike. We are in the early innings of this space’s evolution in the era of AI. As a result, it’s hard to say what the ‘right’ methodology will be for protecting against deepfakes.

Ultimately, all of us will bear some responsibility for double-checking the images and videos shared with us and for remaining skeptical of things that seem “too good to be true.”

In early 2021, this deepfake of Tom Cruise may have taken “weeks of work” using the open-source DeepFaceLab software. How long would it take today, with all the advancements in AI?

Be careful about what you see out there, as it may not be real!

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback on the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying, but if you are building the next great intelligent application and want to chat, drop us a line!