Grading Our 2024 Predictions

Our annual year-end tradition of seeing what we got right, and where we were way, way off

Please subscribe, and share the love with your friends and colleagues who want to stay up to date on the latest in artificial intelligence and generative apps!🙏🤖

We’re just a few weeks away from the end of 2024, which means it’s time for our annual tradition of looking back and grading our predictions for the year.

Generative AI exploded into the public consciousness in 2022 and went “mainstream” in 2023. In 2024, AI has embedded itself as an everyday topic, permeating everything from boardroom conversations to Thanksgiving dinners and even the US election. In some circles, ChatGPT went from “revolutionary” to “annoying” in just two years, and the proclamations that we are in an “AI bubble” are only getting louder.

We firmly believe that we are still in the early innings of the AI revolution, and we should only expect things to get even crazier from here. This means more innovation, better models, larger funding rounds for the “deemed winners”, and likely a further expansion of the AI bubble. On the flip side, we will also likely see many early AI startups run out of cash and falter, moats erode, and the category consolidate. And through it all, we will have a front-row view of the Elon vs. Sam battle :)

However, one thing that will not change is that there will continue to be plenty of “unknown unknowns” that we cannot even begin to foresee today. Of course, that will not stop us from making predictions anyway.

So let’s begin the fun of grading ourselves for last year’s predictions!

In December 2023, we made five predictions for what we expected to happen in 2024 (you can read the entire post here):

Open Source Models Eclipse Closed Source

Apple Awakens (in AI)

Multimodality Becomes The Norm

Agent to Agent Interactions Increase

The ROI Question Becomes Real

Let’s go through each one by one:

Open Source Models Eclipse Closed Source

Did it happen? No

In 2023, open-source models like Meta’s Llama 2, Mistral AI’s models, Falcon 180B, and EleutherAI’s models stepped into the spotlight and garnered significant attention from developers and AI technologists. Many of these models performed very well against their closed-source peers, topping benchmarks and eliciting rave reviews. We expected that in 2024, open-source models would continue to proliferate and that the benefits of customization, transparency, and lower costs would help them eclipse closed-source models.

However, that wasn’t the case!

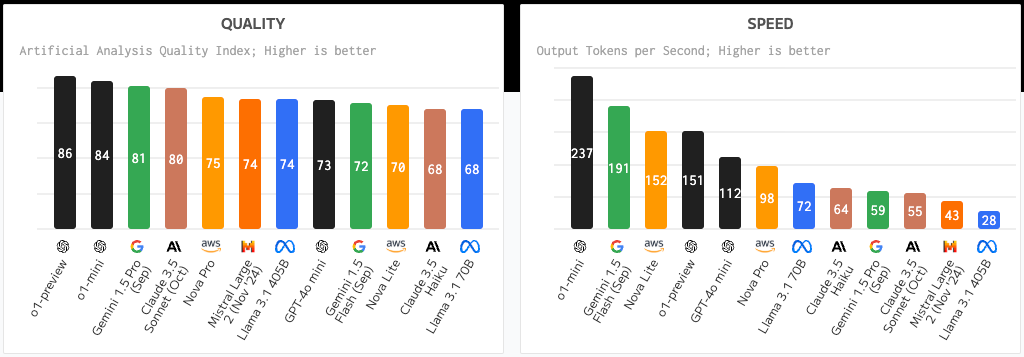

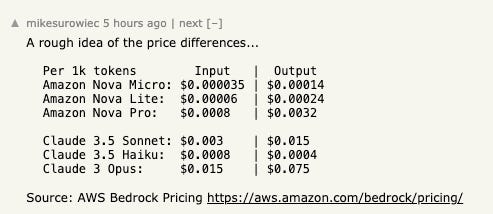

This year we saw a surge in high-performing closed-source models like GPT-4o and o1-preview from OpenAI, Claude 3.5 Sonnet and Haiku from Anthropic, Gemini 1.5 from Google, and, more recently, the Nova family of models from Amazon. Generally, these models have outperformed Mistral Large 2 and Llama 3.1 on quality and speed, per Artificial Analysis (see the recent rankings above).

So what happened? Large platforms like OpenAI, Anthropic, and Google continued to invest billions of dollars into their models and attract the best and brightest researchers (often from each other), making significant proprietary advances that open source hasn’t yet been able to match. Importantly, the cost of serving models continues to drop precipitously. For example, Amazon’s Nova is significantly cheaper than Claude 3.5 Sonnet and Haiku while offering comparable context windows and performance. As costs for proprietary models continue to drop, the cost difference between them and free open-source models will become negligible.

Apple Awakens (in AI)

Did it happen? Kind of

Last year, Apple was notoriously absent from the list of Big Tech names throwing their hats in the AI ring. While Google, Amazon, Meta, and Microsoft each launched a foundation model or a significant AI partnership, Apple had yet to announce anything significant. We assumed that would change in 2024.

It did change, but also not really.

During WWDC 2024 in June, Apple announced its spin on AI, branding it “Apple Intelligence”. Apple Intelligence is a “personal intelligence system…comprised of multiple highly-capable generative models that are specialized for our users’ everyday tasks, and can adapt on the fly for their current activity.” Essentially, Apple Intelligence would be deeply embedded into Apple products and applications, letting users apply AI to everything from writing tools inside Mail to generating images on-device within Image Playground.

However, the “intelligence” was mostly a dud.

While Apple Intelligence was included in a free iOS 18.1 update released in October, the features have been slow to roll out and largely “unremarkable”. Per Wirecutter:

Several of the Apple Intelligence features that Apple showed off earlier this year, including the ability to generate emojis and images with AI as well as the iPhone’s ChatGPT integration, aren’t included in iOS 18.1, and what is included Apple calls beta features, so the tools aren’t fully polished.

For example, features like Proofread, Rewrite, Summary, and Photo Clean-up are not fully featured (e.g., the AI photo editing tool can only remove objects, not add them) and have been deemed “half-baked”, likely because Apple wants to avoid the kind of bad headlines that have plagued its competitors.

It’s certainly not what we’ve come to expect of Apple releases. Perhaps in 2025, we will see the true promise of Apple Intelligence: AI powering every application and product we use on-device.

Multimodality Becomes The Norm

Did it happen? Kind of

Multimodality refers to the ability of models to process and integrate information from multiple types of data or sensor inputs simultaneously, including text, images, audio, video, and other mediums. In September 2023, OpenAI proclaimed that “ChatGPT can now see, hear, and speak”, referring to new voice and image capabilities being rolled out in ChatGPT. Our feeling at the time was that multimodality would take hold across other models and that non-text-based communication would become more pronounced.

That trend is moving in the right direction, but text remains the dominant form of communication with models.

Interestingly, we are starting to see sparks of growth in audio-based communication with models. ElevenLabs just released a conversational AI builder, allowing developers to “build AI agents that can speak in minutes with low latency, full configurability, and seamless scalability”. LiveKit has made significant strides in powering audio and video between LLMs and their users (in fact LiveKit is what powers ChatGPT’s voice capabilities).

Like Aaron Levie, we’re excited for a future where human-to-agent interactions will happen through both voice and text!

Agent to Agent Interactions Increase

Did it happen? No

While there has been significant progress in AI agent development over the last year, the seamless, autonomous collaboration between agents remains more theoretical than practical. Our colleague Jon Turow wrote about how AI Agents are still "Stuck in First Gear" earlier this year. We have continued to witness most agentic activity requiring human oversight or direct interaction with users.

Several hurdles have slowed the adoption of agent-to-agent interactions:

Technical Hurdles: Perhaps the most important issue here is security. There remains a lack of standardized and secure ways for agents to access the tools, applications, and systems needed to perform tasks on behalf of users. Without robust access protocols and safeguards, granting agents free rein remains risky and impractical. Some use cases work when the human provides the agent with credentials upfront to complete a specific task (e.g., logging in to my DoorDash account), but granting master access (e.g., through 1Password or Okta) remains risky, even more so in a business context. Other important technical issues revolve around memory, orchestration, understanding user intent, and abiding by guardrails while still having the autonomy to act. Current agentic capabilities in these areas are nascent, but we are seeing memory persistence and chain-of-thought reasoning techniques emerge to address these gaps.

Ecosystem Limitations: Agent-to-agent interactions require widespread adoption of agents across individuals and companies. This remains far from reality, as many people and organizations are hesitant to integrate agents deeply into their workflows due to privacy concerns and technical limitations. The lack of shared agentic infrastructure, such as a unified ecosystem or common standards for inter-agent communication, also remains a challenge. Without these, building interoperable agents remains costly, complex, and reliant on humans.

We remain excited about the vision of agents seamlessly collaborating on behalf of users, resulting in agent-to-agent interactions, but realizing this vision will require continued innovation.

The ROI Question Becomes Real

Did it happen? Yes

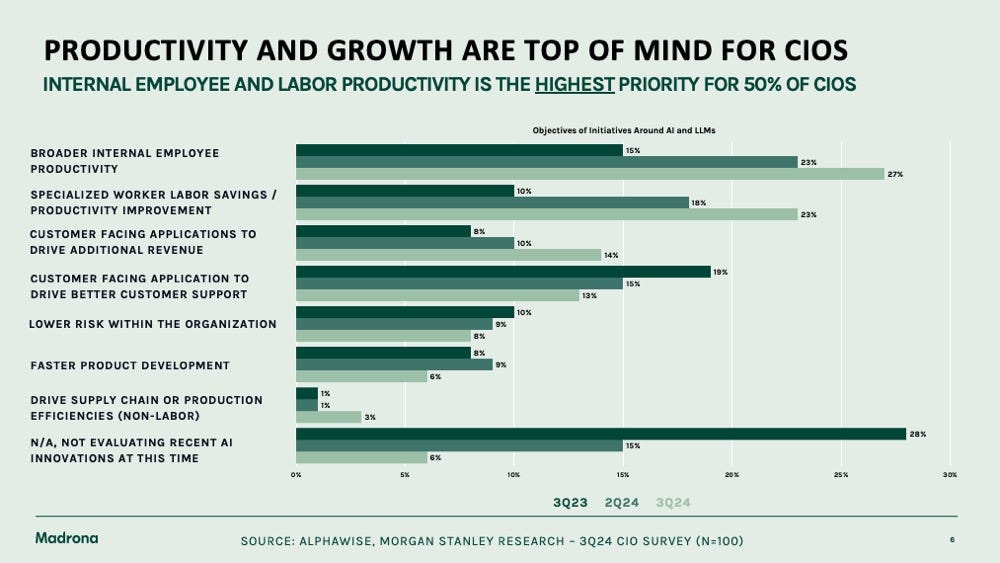

Throughout the year, CIOs consistently emphasized the need to demonstrate ROI. While budgets remain for trialing AI products, renewals are reserved for those delivering tangible value. At the Madrona 2024 Intelligent Apps and CIO Summits, leaders from Goldman Sachs, Delta, Microsoft, and others highlighted the critical importance of measurable outcomes to justify AI investments. Some key insights we heard:

Shift to Measurable Value: Companies are moving beyond experimentation to integrating AI into mission-critical tasks that directly impact productivity and growth. CIOs are prioritizing use cases like productivity tools (e.g., GitHub Copilot, Zoom AI Companion) and customer service enhancements.

ROI Challenges: The economic climate has sharpened the focus on proving AI’s value. Many enterprises delayed AI projects due to high costs, limited business use cases, and unproven ROI. However, examples like Accenture's use of GitHub Copilot have demonstrated tangible benefits, such as increased developer productivity (93% code adoption) and greater developer fulfillment.

AI Product Education: For startups, success lies in building scalable AI solutions that solve specific pain points while demonstrating clear ROI. But because AI solutions are still very nascent, startups must educate enterprises on AI's potential and guide them through adoption complexities.

Conclusion

As 2024 comes to a close, it’s clear that the AI landscape continues to evolve at a rapid pace. This year did bring several unexpected developments: Anthropic’s rapid rise with its Claude models, significant advances in AI-generated code beyond Microsoft’s Copilot (e.g., Cursor, Codeium, Bolt.new), and a notable decrease in the cost of models (e.g., the cost of running GPT models has decreased by nearly 90% in the last 18 months). Smaller models are gaining relevance, and the conversation has shifted from pre-training to post-training.

One thing that remains unchanged is that the pace of AI development continues unabated, and it’s difficult to foresee what will happen in one month, let alone one year.

With that said, stay tuned for our next post of the year, where we make our predictions for 2025.

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback on the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying, but if you are building the next great intelligent application and want to chat, drop us a line!