DevDay!

Key learnings and takeaways from OpenAI's inaugural Developer Day

Thanks to our now 1.6K+ subscribers! Please continue to subscribe and share with colleagues, friends, or anyone you think would enjoy staying current on the latest trends in AI :)

OpenAI hosted its first Developer Day in San Francisco on Monday. With an aura and lead-up rivaling even Apple’s famous WWDC, OpenAI did not disappoint, announcing several major milestones along with new products, features, and enhancements. In recent months many industry analysts had bemoaned waning interest in ChatGPT, and rival AI companies like Anthropic and Adept had raised vast sums of money, yet OpenAI again threw down the gauntlet and reinforced its identity as the dominant product company in AI. And even Satya Nadella made an appearance!

The opening keynote led by CEO Sam Altman is definitely worth watching, but in case you missed it, we’ve summarized the key announcements from DevDay, and more importantly our view on the implications for the AI startup ecosystem below.

Let’s dig in.

Key Announcements

In our view, the centerpieces of DevDay were the release of OpenAI’s new model, GPT-4 Turbo, and the announcement of “GPTs”, new tools for creating custom assistants that will be featured in OpenAI’s GPT Store.

GPT-4 Turbo

This is OpenAI’s newest model, and it boasts a number of key features, including:

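Increased Context Length - A 128K-token context window, enough to fit the equivalent of more than 300 pages of text in a single prompt (up from 8K and 32K for GPT-4; GPT-3.5 Turbo offers a 16K context window).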

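More Control of Responses and Outputs - Improved instruction following, a new JSON mode that constrains the model to return valid JSON, more reproducible outputs via a new seed parameter, and parallel function calling (the model can request several function calls in a single response).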
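For developers wondering what these controls look like in practice, here is a minimal sketch, assuming the Chat Completions request format OpenAI documented at DevDay. It builds a request body that turns on JSON mode and a fixed seed (which OpenAI describes as best-effort, not guaranteed, determinism), then handles a turn in which the model asks for two function calls at once. The model name `gpt-4-1106-preview` was the GPT-4 Turbo preview identifier at launch; `get_weather`, the seed value, and the hand-written assistant message are hypothetical stand-ins so the example runs offline.

```python
import json


def build_chat_request(user_prompt: str, seed: int = 1234) -> dict:
    """Chat Completions request body using JSON mode and a fixed seed."""
    return {
        "model": "gpt-4-1106-preview",               # GPT-4 Turbo preview name at launch
        "response_format": {"type": "json_object"},  # JSON mode
        "seed": seed,                                # best-effort reproducibility
        "messages": [
            # JSON mode expects the conversation itself to ask for JSON.
            {"role": "system", "content": "Answer with a JSON object."},
            {"role": "user", "content": user_prompt},
        ],
    }


def run_tool_calls(assistant_message: dict, tools: dict) -> list:
    """Execute every tool call the model requested in one turn and return
    the 'tool' messages to send back (run sequentially here for simplicity)."""
    replies = []
    for call in assistant_message.get("tool_calls") or []:
        fn = tools[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return replies


# Hypothetical local tool with stub data (not a real weather lookup).
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}


# Hand-written message shaped like an API reply requesting two calls at once.
message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Tokyo"}'}},
    ],
}

for reply in run_tool_calls(message, {"get_weather": get_weather}):
    print(reply["tool_call_id"], reply["content"])
```

With the official Python SDK (v1.x), a body like this could be sent with `client.chat.completions.create(**build_chat_request(...))`; the tool replies are appended to the conversation before the next request.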

Better World Knowledge - GPT-4 and GPT-3.5 previously had knowledge only up to September 2021; GPT-4 Turbo has knowledge up to April 2023.

New Modalities - Updates to DALL·E 3, text-to-speech (TTS), and Whisper. DALL·E 3 is now available through the API, so developers can generate new images from a text prompt. The new TTS API converts text into natural-sounding speech. Whisper is available via the API with optimized inference for faster processing.

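GPT-4 Fine-Tuning and Custom Models - Experimental access to GPT-4 fine-tuning, plus a Custom Models program in which OpenAI works with selected organizations to train models for their domain, including domain-specific pre-training.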
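To give a feel for what "adapting a model" involves on the developer side, here is a minimal sketch that prepares training data in the chat-format JSONL that OpenAI documents for fine-tuning (one `{"messages": [...]}` record per line). We assume GPT-4 fine-tuning uses the same format as GPT-3.5 Turbo fine-tuning; the contract-clause examples and system prompt are hypothetical illustrations, not real data.

```python
import json


def to_chat_jsonl(examples, system_prompt: str) -> str:
    """Turn (user_prompt, ideal_answer) pairs into chat-format JSONL text,
    one training example per line."""
    lines = []
    for prompt, answer in examples:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},  # the behavior to learn
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


# Hypothetical domain-specific examples (illustrative only).
examples = [
    ("Classify: 'Either party may terminate with 30 days notice.'", "termination"),
    ("Classify: 'Fees are due within 15 days of invoice.'", "payment"),
]

jsonl = to_chat_jsonl(examples, "You label contract clauses.")
print(jsonl)
```

The resulting file would then be uploaded and used to start a job; the official Python SDK exposes `client.files.create(..., purpose="fine-tune")` and `client.fine_tuning.jobs.create(...)` for this, though which base models a given account may fine-tune depends on OpenAI's access rules at the time.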

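Copyright Shield - OpenAI will step in to defend customers, and pay the costs incurred, if they face legal claims of copyright infringement. This applies to generally available features of ChatGPT Enterprise and the developer platform.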

Building “GPTs” & The GPT Store

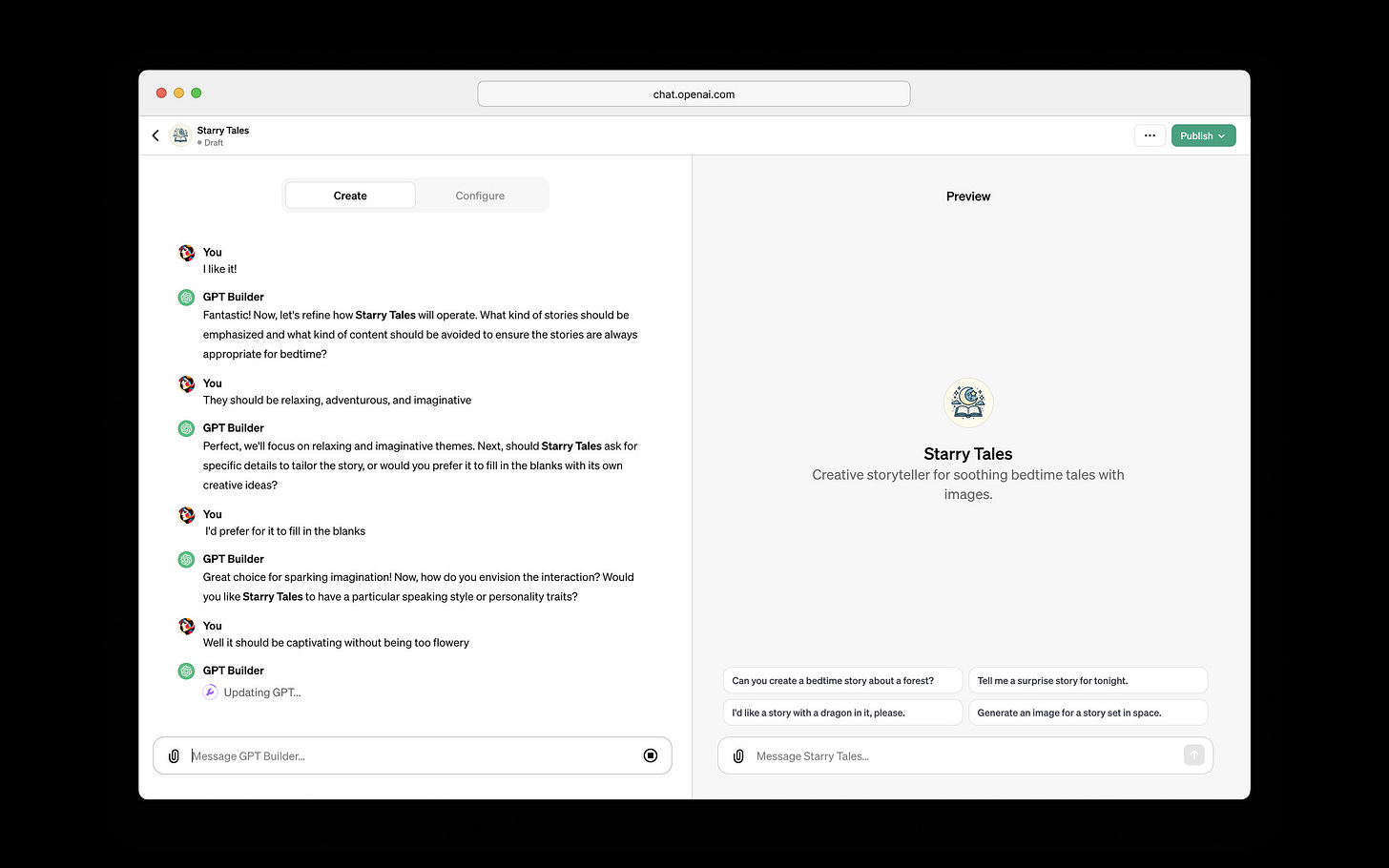

GPTs leverage GPT-4 Turbo and are tailored versions of ChatGPT that are designed for specific tasks. These GPTs can be published for others to use (within an organization or publicly) in the GPT Store. We thought this was the most interesting and strategic announcement for OpenAI.

GPTs - OpenAI describes GPTs as follows: “GPTs are a new way for anyone to create a tailored version of ChatGPT to be more helpful in their daily life, at specific tasks, at work, or at home—and then share that creation with others. For example, GPTs can help you learn the rules to any board game, help teach your kids math, or design stickers.” GPTs are built in natural language, allowing anybody, even non-developers, to experiment with creating new GPTs. OpenAI showcased early examples from partners such as Canva and Zapier.

GPT Store - OpenAI’s GPT Store will let developers and users publish their GPTs, and OpenAI plans to share revenue with builders based on how many people use their GPTs. The GPT Store ultimately serves as a distribution platform for developers to create and build new GenAI apps.

In many ways, the GPTs felt like a new spin on OpenAI’s original announcement of “plug-ins”.

Other noteworthy announcements:

Pricing - GPT-4 Turbo is considerably cheaper than GPT-4, especially for prompt (input) tokens. Sam highlighted the pricing during the keynote, describing how “GPT-4 Turbo input tokens are 3x cheaper than GPT-4 at $0.01 and output tokens are 2x cheaper at $0.03” (prices per 1K tokens, versus $0.03 and $0.06 for GPT-4). The updated pricing can be found here.
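To see what "3x cheaper on input, 2x cheaper on output" means for a real request, here is a small cost calculator. It assumes the launch list prices per 1K tokens: $0.03 input and $0.06 output for GPT-4 (8K context), versus $0.01 and $0.03 for GPT-4 Turbo. The dictionary keys are readable labels, not API model names, and the 10K-in / 1K-out request is a hypothetical workload.

```python
from decimal import Decimal

# Launch list prices in USD per 1K tokens (GPT-4 = the 8K-context model).
PRICES_PER_1K = {
    "GPT-4": {"input": Decimal("0.03"), "output": Decimal("0.06")},
    "GPT-4 Turbo": {"input": Decimal("0.01"), "output": Decimal("0.03")},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """USD cost of one request, given its prompt and completion token counts."""
    p = PRICES_PER_1K[model]
    return (p["input"] * input_tokens + p["output"] * output_tokens) / 1000


# Hypothetical workload: a 10K-token prompt with a 1K-token answer.
for model in PRICES_PER_1K:
    print(model, request_cost(model, 10_000, 1_000))
```

Decimal is used instead of floats so that small per-token prices add up exactly. For this prompt-heavy example the request drops from $0.36 to $0.13, which is why long-context workloads benefit most from the input-price cut.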

Assistants API - Lets you build AI assistants within your own applications that use OpenAI models and tools, such as Code Interpreter, Retrieval, and function calling, to respond to user queries. More on that announcement here.
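The Assistants API is organized around assistants, threads (server-side conversation state), messages, and asynchronous runs. Below is a minimal sketch of that flow, based on the beta Python SDK as of late 2023 (`client.beta.threads...`); method names and helpers may differ in later SDK versions. `ask_assistant` and the offline `make_fake_client` stand-in are hypothetical helpers: the fake mimics only the attributes the function touches, so the sketch runs without an API key, and a real `openai.OpenAI()` client would be passed in its place.

```python
import time
from types import SimpleNamespace as NS


def ask_assistant(client, assistant_id: str, question: str, poll_seconds: float = 1.0) -> str:
    """Send one question to an existing assistant and return its text reply."""
    threads = client.beta.threads
    thread = threads.create()  # a thread holds the conversation state server-side
    threads.messages.create(thread_id=thread.id, role="user", content=question)
    run = threads.runs.create(thread_id=thread.id, assistant_id=assistant_id)
    # Runs are asynchronous: poll until the run leaves the queued/in-progress states.
    while run.status in ("queued", "in_progress"):
        time.sleep(poll_seconds)
        run = threads.runs.retrieve(thread_id=thread.id, run_id=run.id)
    if run.status != "completed":
        # e.g. "requires_action" (function calling), "failed", or "expired"
        raise RuntimeError(f"run ended with status {run.status!r}")
    messages = threads.messages.list(thread_id=thread.id)
    # Messages are listed newest first, so the assistant's reply is data[0].
    return messages.data[0].content[0].text.value


def make_fake_client(answer: str):
    """Offline stand-in exposing only the attributes ask_assistant uses."""
    statuses = iter(["in_progress", "completed"])
    thread = NS(id="thread_demo")
    reply = NS(content=[NS(text=NS(value=answer))])
    return NS(beta=NS(threads=NS(
        create=lambda: thread,
        messages=NS(create=lambda **kwargs: None,
                    list=lambda thread_id: NS(data=[reply])),
        runs=NS(create=lambda thread_id, assistant_id: NS(id="run_demo", status="queued"),
                retrieve=lambda thread_id, run_id: NS(id=run_id, status=next(statuses))),
    )))


print(ask_assistant(make_fake_client("Hello from a fake assistant"),
                    "asst_demo", "Hi!", poll_seconds=0))
```

Polling keeps the sketch simple; a production integration would also handle the "requires_action" status by running the requested functions and submitting their outputs back to the run.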

OpenAI Business Model(s) & Key Considerations:

Coming out of DevDay, it’s clear that OpenAI’s business can be broken into two main lines: 1/ research and access to frontier multi-modal models, and 2/ “GPT”, which we use to encompass ChatGPT and the newly announced GPTs. These two business lines, while very different in distribution and monetization, are closely intertwined, and both play a critical role in OpenAI’s overall success.

Frontier Models (GPT-4, GPT-4 Turbo, DALL·E): Today, OpenAI offers broad API access to its frontier models, which are targeted at developers who want to build applications on top of them. OpenAI’s new releases around GPT-4 Turbo give developers incentives to stay on its platform rather than look for other toolkits and solutions. The launch of the GPT Store is a strategic move to capture the developer audience: by letting developers monetize their work, it keeps them within the OpenAI model ecosystem.

ChatGPT & GPTs: ChatGPT launched in November 2022 and by January 2023 reportedly had over 100 million monthly active users. ChatGPT was a brilliant way for OpenAI to win over individual consumers while showcasing the power of its frontier models. In many ways, ChatGPT is the “AGI application”, whereas the newly launched “GPTs” are “vertical-specific applications”. The GPT Store also enables an entirely new audience of non-technical users to build GPTs and monetize them.

Conclusion: So what does this all mean for the ecosystem?

Invariably the common refrain coming out of DevDay (at least on X) is “how many AI startups has OpenAI killed?”. Even blog posts were being written mere moments after DevDay ended!

However, we believe this is a knee-jerk reaction that doesn’t accurately reflect what will happen in the market. In fact, not long ago there was a similar reaction at AWS re:Invent: every time AWS flashed up one of its newest products, a collective groan could be heard from founders who thought it was the end of the road.

But that didn’t end up being the case!

Despite AWS having offerings in every part of the cloud infrastructure stack, large independent companies do exist in those sectors! Just look at databases (MongoDB, Redis, Elastic); data warehouses & analytics (Snowflake, Databricks); networking (Cloudflare, Fastly); etc. So even though AWS churns out incredible products every year, each once thought to be the death knell for startups building in that space, it’s clear today that those markets were large enough for several $1B+ companies to exist.

Where are the Opportunities?

Easier to be a “Micro-Entrepreneur” → Neal Khosla, the cofounder of Curai, made a great point that OpenAI’s new features make it much easier for individuals to become “micro-entrepreneurs” who can build interesting businesses without requiring massive gobs of VC money. Just as AWS made it easier for startups to scale by abstracting away storage, compute, and infrastructure complexities, OpenAI’s “App Store for AI” can help cash-strapped founders use AI in place of manual human work.

Open Source → As dominant as OpenAI is, GPT remains a closed-source model, with the limitations that come with that (no direct access to the weights, less control, etc.). As we wrote in our last piece, “Open (Source) Sesame”, there are a number of advantages to building with open-source models. Startups looking to compete with OpenAI may find more success down the open-source path.

Security and Orchestration → If anyone can use OpenAI to build custom models and their own GPT agents, we’re going to end up in a world with millions of new apps, agents, and machines. Our sense is that this will inevitably open up more security gaps and vulnerabilities that need protecting. There will likely be an opportunity for a new company to serve as the security and orchestration layer for an “Agent World”.

Serve as the “Switzerland” → AI startups can position themselves as neutral parties. We believe the future will be made up of “model cocktails”: rather than relying on a single model, developers will use best-in-class models, both closed-source and open-source, for specific tasks. As a result, we believe AI startups focused on the tooling and middleware layers will still play critical roles in the ecosystem, since they can stay friendly with all the model providers.
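To make the “model cocktail” idea concrete, here is a minimal sketch of the kind of routing a neutral middleware layer might perform: each task type maps to whichever model is best suited, with a fallback for everything else. The task categories and model names are hypothetical placeholders, and the backends are stand-in functions; a real system would call actual closed-source APIs or self-hosted open-source models behind the same interface.

```python
from typing import Callable, Dict

# Hypothetical routing table: task type -> model identifier.
ROUTES: Dict[str, str] = {
    "code_generation": "closed-frontier-model",
    "summarization": "open-source-mid-model",
    "classification": "open-source-small-model",
}
DEFAULT_MODEL = "closed-frontier-model"  # fallback for unknown task types


def route(task_type: str) -> str:
    """Pick a model for the task, falling back to the default."""
    return ROUTES.get(task_type, DEFAULT_MODEL)


def run_task(task_type: str, prompt: str,
             backends: Dict[str, Callable[[str], str]]) -> str:
    """Dispatch the prompt to whichever backend the router selects."""
    return backends[route(task_type)](prompt)


# Stand-in backends that just report which model handled the request.
backends = {name: (lambda p, n=name: f"[{n}] {p}")
            for name in set(ROUTES.values()) | {DEFAULT_MODEL}}

print(run_task("summarization", "Summarize DevDay", backends))
print(run_task("translation", "Bonjour", backends))  # unknown type -> default
```

Because the routing table lives outside any one vendor’s SDK, swapping a model in or out is a one-line change, which is exactly the neutrality a “Switzerland” layer would sell.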

OpenAI’s DevDay has been examined and recapped to death, and for good reason. With the world of AI (and the broader tech industry) watching closely, OpenAI met and exceeded the bar for showing what it is capable of. It’s only a matter of time before it reaches the vaunted status of generational companies like Microsoft, Amazon, Google, and Apple…all companies that at one time were thought to be “startup killers” but in many cases enabled a new generation of startups to succeed.

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback on the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). It goes without saying, but if you are building the next great intelligent application and want to chat, drop us a line!
