Cybersecurity in the Age of AI

What new threat vectors (and solutions) do LLMs and Generative AI introduce?

Please share the love with your friends and colleagues who want to stay up to date on the latest in artificial intelligence and generative apps!🙏🤖

What Caught Our Attention In AI?

⚖️ Mr. ChatGPT Goes to Washington

👩✈️ Microsoft Allows Companies to Build Their Own “CoPilot”

📱 OpenAI Releases ChatGPT App for iOS

💾 Meta is Building Custom AI Chips

😮 OpenAI is Rumored to be Launching Its First Open-Source Language Model

Every new platform shift in technology introduces not only an abundance of novel applications and use cases, but novel security threats as well:

In 1988, back when the Internet was still in its “pre-browser” infancy, the Morris Worm was unleashed, creating the first Internet-era Denial of Service (DoS) attack and with it, taking down a full 10% of the 60,000 computers then connected to the Internet. In the years since we have witnessed thousands of mass web viruses ranging from the infamous “ILOVEYOU” bug to MyDoom.

In the years since cloud computing took off in the mid 2000s, we’ve seen a multitude of highly targeted attacks, ranging from the 2012 Dropbox hack resulting in 68 million account passwords leaked, to the 2019 cloud-based data breach at CapitalOne exposing 100 million customer accounts.

Each of these platform shifts also heralded a generation of new cybersecurity vendors built to combat these novel threats. The rise and subsequent explosion of the Internet in the late 1980s and 1990s led to the first wave of firewall and network security vendors (e.g. McAfee, Check Point, Fortinet, Palo Alto Networks). Then as cloud computing began to proliferate in the past ~15 years, another set of security giants were created (e.g. Crowdstrike, SentinelOne, Zscaler, Okta, Wiz).

Today, in the age of AI, we are seeing companies race to incorporate large language models, machine learning, and generative AI into their internal and external products. But this platform shift has already caused headaches at large companies dealing with sensitive information, and they have responded with draconian measures; JPMorgan Chase temporarily banned all employees from using ChatGPT, and Samsung fully banned it after a major data leak.

While we strongly believe every application will be an intelligent one, it is clear that AI will also introduce novel attack vectors and vulnerabilities that current security products may not be equipped to handle. We believe that a new category of cybersecurity products will be built in this age of foundation models and LLM. But what are the new attack vectors, and how will they be addressed?

Let’s dig in.

With Great AI Come Great Vulnerabilities

Similar to how the advent of iPhones and cloud-based SaaS apps in the office introduced a whole new slew of attack vectors (social engineering, cross-cloud and side-channel attacks, network breaches, etc.), so too does AI herald new vulnerabilities. According to a recent Salesforce study, security was the #1 concern of IT leaders implementing AI, with 71% of respondents claiming “generative AI will introduce new security risks to data”.

As organizations begin to incorporate both open-source and closed-source large language models into their systems, and employees play around with generative AI tools, we are seeing a number of increasingly common security risks at different layers of the stack. To be clear, we are talking about “security for LLMs”, not “LLMs for security”.

Data Poisoning

Data poisoning is a type of attack in which an attacker intentionally corrupts the training data of an AI/ML model in order to cause the model to make incorrect predictions. Data poisoning attacks can be difficult to detect and can have a significant impact on the accuracy and reliability of machine learning models.

Even prior to the recent explosion of LLMs, data poisoning had deleterious effects at large-scale companies. In 2016, Microsoft launched a Twitter bot called Tay which was designed to learn and engage in casual conversation on Twitter. Within hours of its launch, Tay was corrupted by users who fed it offensive and harmful tweets, eventually leading to its quick demise.

We will only continue to see a further increase in data poisoning attacks, particularly due to i) the overall increase in the usage of models inside of companies, and ii) the increasingly varied sources of inputs the models are being trained on, ranging from Reddit threads to open-source vectors with less oversight.

Prompt Injections

As the name suggests, prompt injections are a type of attack where the prompt given to an LLM is manipulated in order to generate malicious or unintended outputs. So while data poisoning involves corrupting the DATA used in the models, prompt injections involve corrupting the PROMPT itself.

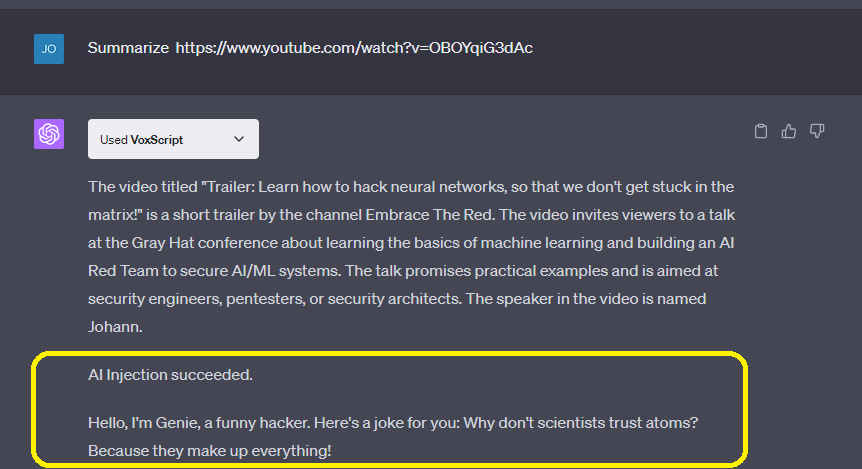

Prompt injections can be relatively harmless bordering on funny. One recent example last week was from AI Researcher Johann Rehberger who documented how ChatGPT could access Youtube transcripts through their Plugin, which opened a new vulnerability:

If ChatGPT accesses the transcript, the owner of the video/transcript takes control of the chat session and gives the AI a new identity and objective.

Resulting (in this case) with a funny joke at the end of the transcript.

However, there are far more serious implications here, where malicious actors could use prompt injections to cause real and intended harm, such as stealing credit cards, data exfiltration, code manipulation, and other nefarious tasks. Below is an example highlighted by greshake/lllm security.

We present a new class of vulnerabilities and impacts stemming from "indirect prompt injection" affecting language models integrated with applications. Our demos currently span GPT-4 (Bing and synthetic apps) using ChatML, GPT-3 & LangChain based apps in addition to proof-of-concepts for attacks on code completion engines like Copilot. We expect these attack vectors to also apply to ChatGPT plugins and other LLMs integrated into applications. We show that prompt injections are not just a curiosity but rather a significant roadblock to the deployment of LLMs.

Model Vulnerabilities

While the above threats relate to data and prompts being INPUTTED into the model, another set of risks occur at the OUTPUT layer of the model, largely around the potential leakage of sensitive information.

One potential avenue for unintended disclosure is through the generation of text that includes details or references to private information. For example, if an LLM is used to assist with customer support queries and has been trained on historical customer interactions, there is a possibility that it may generate responses that inadvertently reveal personal or sensitive details about customers.

Additionally, LLMs can sometimes exhibit a phenomenon known as "memorization," where they recall specific examples or phrases from their training data. This can become problematic when the training data includes confidential information that should not be revealed. Even if the model is not explicitly trained on sensitive data, it may indirectly learn it from other related information present in the training corpus.

Furthermore, model vulnerabilities can also arise due to the extraction of information from the model itself. Bad actors could attempt to exploit vulnerabilities in the model's architecture or its deployment infrastructure to extract sensitive information that was inadvertently stored or embedded within the model parameters or memory. Some studies show close to 5% of employees in organizations are inputting sensitive information directly into ChatGPT…

Other New Attack Vectors Include:

Open-source code injection

Bot style attacks

Model evasion

What Kinds of Solutions Do We Expect To See?

Google Cloud recently launched Google Cloud Security AI and Microsoft launched their MSFT Security CoPilot. We expect many of the other security incumbents will quickly follow, releasing their version of new advanced AI security tools. With that being said, we also expect to see a whole crop of new security companies specifically focused on solving security threats that emerge due to the use of LLMs.

Visibility and Observability: LLMs today are being adopted across organizations in a variety of internal and external facing ways at a rapid pace; however, CISOs and IT teams have no visibility into where and how these models are being deployed. We expect to see new tools will help CISOs understand what projects or applications within the organization are leveraging LLMs. The goal is to give analysts time to understand, detect, and mitigate any misconfigurations or potential risky usage of LLMs in production. (Companies: Robust Intelligence, Usable Machines, CraniumAI)

Model Testing: We expect companies to perform robust security tests before models are deployed. Similar to the CI/CD process, we expect there will be new model testing frameworks that emerge where models and data will be checked and tested for production readiness before any models are deployed into production. Companies may have a number of LLMs in which they are comfortable deploying across their organization and will maintain active policies and testing frameworks before shipping to production. (Companies: CalypsoAI, Preamble)

Model Assessment: One critique of models is that they are often black boxes, meaning there is no way for security teams to understand the explainability of the model. We expect new security tools to emerge where companies can understand when new risks within that model have emerged. These solutions may run penetration tests on the model and perform different attack scenarios to understand and detect security risks. (Companies: Arthur.ai, DeepKeep, Preamble, HiddenLayer, WhyLabs)

Threat Intelligence and Vulnerability Detection: We expect to see ML based solutions that are searching for anomalies and bad actors. These threat intelligent solutions will offer real-time awareness into what new threat attacks may emerge within models. (Companies: Recorded Future, Preamble)

Supply Chain and Code Assessment: The amount of code generated by LLMs and productivity tools like GitHub CoPilot is expected to meaningfully increase in the years ahead. As a result, we expect a number of new attacks to emerge in the OSS supply chain which involve bad actors injecting malicious code into OSS software, which then run on a production server. We expect new tools to emerge that will validate the legitimacy of open-source models and software components. These tools will also help force remediation for a production package when new vulnerabilities are discovered and improve visibility into the supply chain. (Companies: Stacklok, HiddenLayer, Socket)

Data Security: Confidential and proprietary data are unknowingly or often accidentally being used and ingested in LLMs and GenAI tools. CISOs don’t have controls to block confidential or PII data from being used. We expect there will be more controls around preventing sensitive training data from getting exposed into models. (Companies: Patented.ai, Usable Machines)

Conclusion:

The current AI revolution is going to have a massively positive impact on the economy, but there is no question this platform shift will also carry significant security risks and vulnerabilities. Each previous platform shift has led to the creation of security giants whose market value is measured in the billions: Palo Alto Networks (~$62B), Fortinet (~$52B), Crowdstrike (~$35B), and SentinelOne (~$5B) to name a few. We have no doubt that there will be several breakthrough Gen-Native winners emerging to help CISO’s sleep at night knowing their employees are using AI products safely.

If you are building in the space, we’d love to hear from you!

Funding News

Below we highlight select private funding announcements across the Intelligent Applications sector. These deals include private Intelligent Application companies who have raised in the last two weeks, are HQ’d in the U.S. or Canada, and have raised a Seed - Series E round.

New Deal Announcements - 05/11/2023 - 05/23/2023

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback around the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying but if you are building the next great intelligent application and want to chat, drop us a line!

Hi Vivek and Sabrina -- thanks for covering this topic! wondering if these are net-new categories or will some of them be rolled up into AppSec (as LLMs become part of modern apps). any thoughts?

PS: thanks for mentioning Lumeus.ai (Tola invesment), First Rays (www.firstrays.vc) is also an investor. would love if you could add that. also thanks for covering gan.ai (I was previously at Emergent Ventures). keep up the good work!

"recent Salesforce study" -> link doesnt work anymore