Can Anything Stop Nvidia?

A deeper look into the juggernaut underpinning the AI revolution

On Wednesday, the tech and broader financial world waited with bated breath for 4PM ET to arrive, not just because that is when markets close, but also because Nvidia would announce its Q4 and FY’2024 earnings. This was perhaps one of the most anticipated earnings announcements in recent memory; Goldman Sachs’s trading group went as far as to call Nvidia the “most important stock on planet earth”.

Despite sky-high expectations, Nvidia delivered.

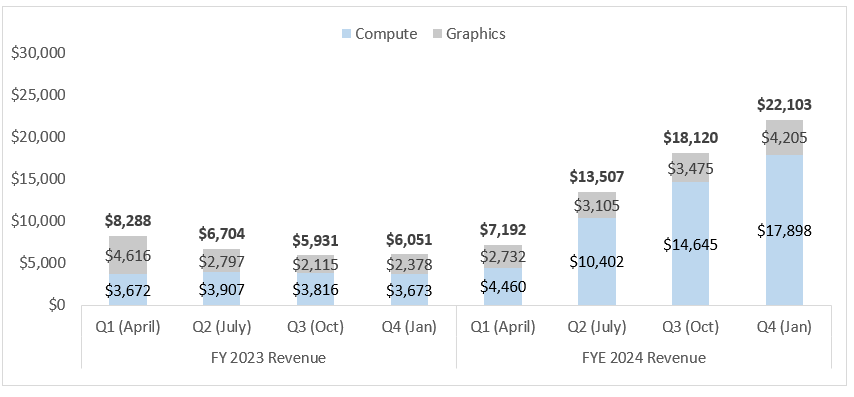

Q4 revenue grew 265% (!) YoY

Data center revenue in Q4 grew a whopping 5x YoY to $18.4B

Gross margins increased 16 percentage points, from 57% to 73%, for FY’24

$11B of free cash flow was generated in the quarter, up from $1.7B in the year-ago quarter

And revenue projections grew to $24B for the upcoming quarter, surpassing analyst expectations
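A quick arithmetic sketch helps separate a change in percentage points from a relative percent change when reading the margin figure above. It uses only the rounded numbers quoted in this post (57%, 73%, $1.7B, $11B); the function and variable names are ours, chosen for illustration.

```python
def percentage_point_change(old_pct: float, new_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return new_pct - old_pct


def relative_change_pct(old: float, new: float) -> float:
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100.0


# Gross margin figures as quoted in the post (rounded).
gm_points = percentage_point_change(57.0, 73.0)
gm_relative = relative_change_pct(57.0, 73.0)

# Free cash flow in $B as quoted in the post (rounded).
fcf_multiple = 11.0 / 1.7

print(f"Gross margin: +{gm_points:.0f} points ({gm_relative:.1f}% relative increase)")
print(f"Free cash flow: {fcf_multiple:.1f}x the year-ago quarter")
```

Because the inputs are rounded, the outputs are approximations rather than reported figures.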

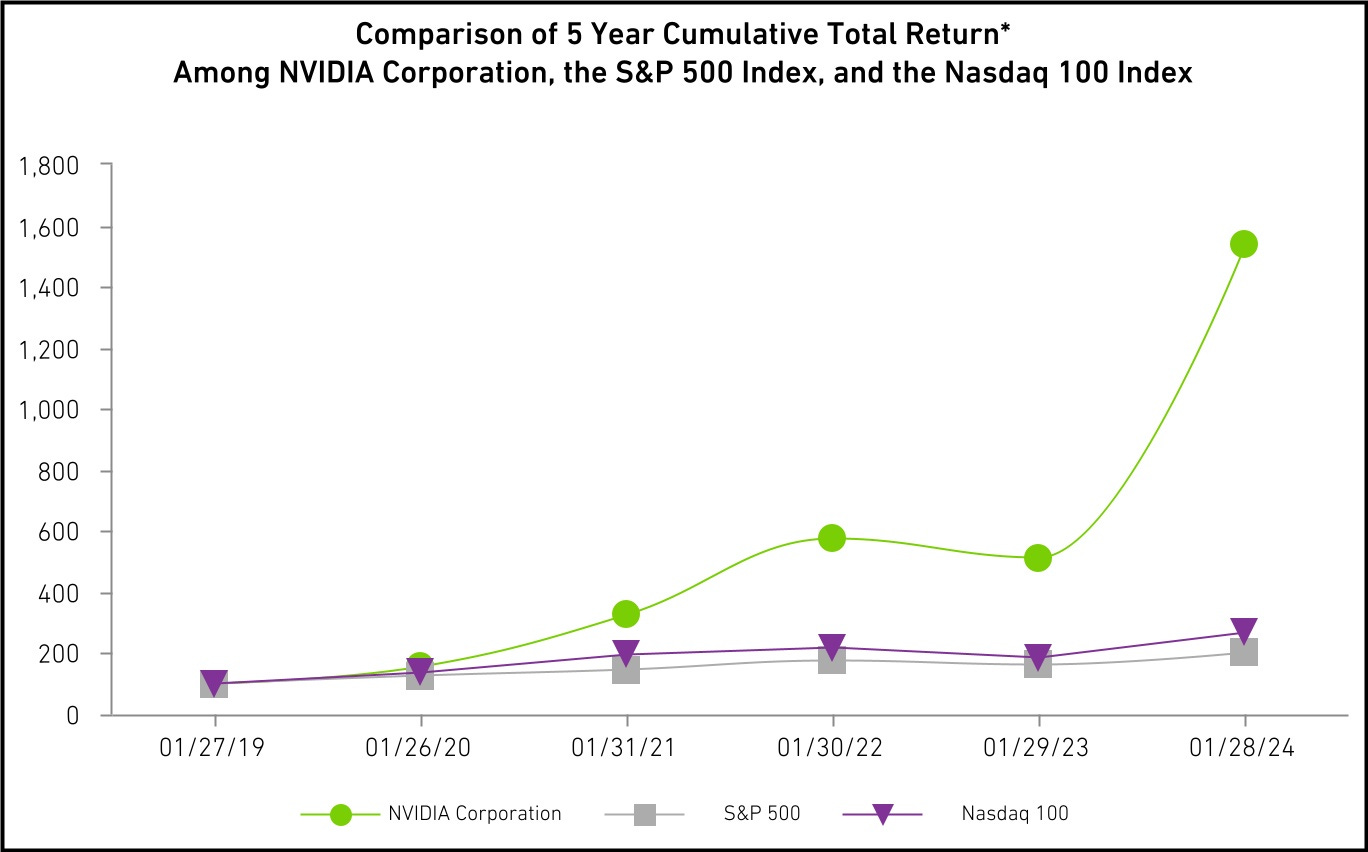

Unsurprisingly, the stock opened on Thursday up 15%, boosting Nvidia’s market cap to nearly $2T.

To contextualize this unprecedented growth, consider this: Nvidia's market cap is now the fourth largest in the world (behind just Apple, Microsoft, and Saudi Aramco), and the company is worth roughly 10x as much as Intel! Few companies globally boast annual revenues of $10B+, let alone achieve such substantial revenue gains at such a vast scale (adding close to $34B of revenue in one year alone). Analysts are bullish on Nvidia's future, and expect the company to maintain a strong competitive advantage.

One could argue that Nvidia's recent performance marks one of the most impressive runs in the history of technology companies, if not of all mega-cap entities in our generation. But why is that? We all know that Nvidia is taking advantage of the generative AI trends, but how specifically is Nvidia able to achieve such strong performance? In this week's post, we delve into Nvidia's business model and revenue streams, understanding the factors propelling its remarkable revenue and operating growth.

Let’s dig in!

Nvidia’s Main Business Units

Let’s start by understanding how Nvidia operates. Nvidia operates in four large markets: Data Center, Gaming, Professional Visualization, and Automotive. While they operate in these main segments, Nvidia reports revenue and operating margins in two main categories:

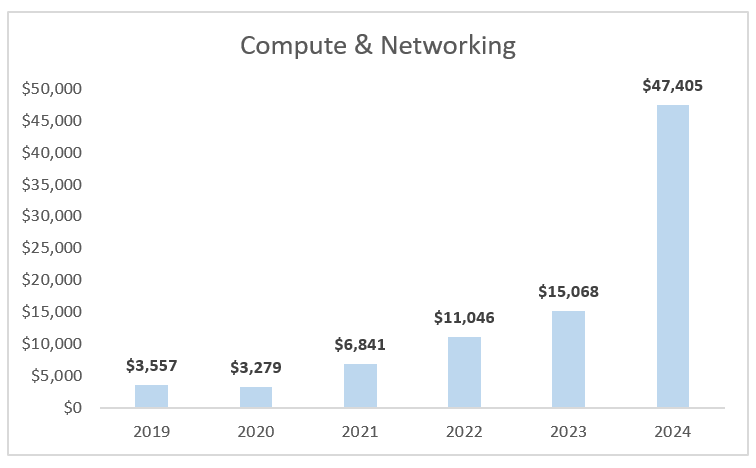

1 - Compute & Networking: This is Nvidia’s largest revenue segment, and includes Nvidia's data center platforms, systems for AI, high-performance computing, and accelerated computing, as well as networking and interconnect solutions, automotive AI cockpit, autonomous driving development agreements, autonomous vehicle solutions, cryptocurrency mining processors, and Jetson for robotics and other embedded platforms.

The Compute & Networking business unit has been the biggest driver of revenue growth for the overall Nvidia business, growing ~215% YoY from 2023 to 2024, and now making up 78% of Nvidia’s overall revenue. Growth has primarily been driven by demand for Nvidia’s Data Center Accelerated Computing Platform. Nvidia’s HGX Platform has seen significant demand driven by the increased number of companies training and running inference on LLMs and recommendation engines, and building generative AI apps. The HGX platform has also seen accelerating demand from cloud service providers (AWS, Google Cloud, Meta, Microsoft Azure, Oracle) and large consumer internet companies (TikTok, ByteDance). The platform has also seen significant traction in the sovereign AI infrastructure market.

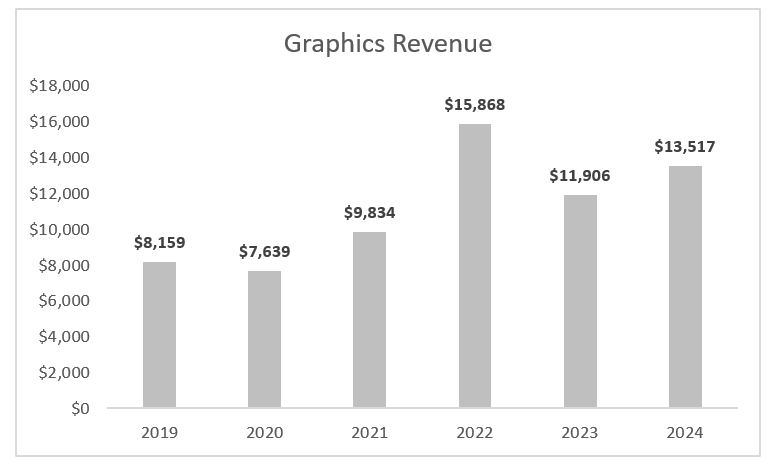

2 - Graphics: The Graphics segment includes GeForce GPUs for gaming and PCs, the GeForce NOW game-streaming service, and related infrastructure, as well as solutions for gaming platforms. It also encompasses the Quadro/NVIDIA RTX GPUs for enterprise design, GRID software for cloud-based visual and virtual computing, and automotive platforms for infotainment systems.

Historically, the Graphics business made up a majority of Nvidia’s revenue (~70% in 2019 and 2020 and ~60% in 2021 and 2022). In 2023, we started to see that shift, with ~44% of revenue coming from Graphics, and in 2024 that was down to 22%.

Nvidia also operates in the automotive segment (growth primarily driven by autonomous vehicles) and professional visualization (AI imaging in healthcare, edge AI in smart spaces and the public sector).

When looking at the overall business, it’s incredibly impressive to see how much Nvidia’s gross margins have expanded, growing from 62% in 2019 to 73% in 2024, which is very rare for a company at this scale. The expansion in gross margins has been driven primarily by the significant growth in Compute & Networking. Operating margins have improved alongside gross margins: Nvidia reported $32B of operating income in the Compute & Networking business in FY 2024 alone, which represents 530% YoY growth.

No doubt, Nvidia is coming off one of the best years ever for a company at this scale, growing 125%+ while improving gross margins to near-software territory and throwing off billions in cash.
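The growth rates quoted in this post can be inverted to sanity-check the prior-year figures they imply. The sketch below is illustrative only: the inputs are the rounded numbers from this post (530% operating income growth to $32B, and roughly 125% revenue growth adding close to $34B), and the helper names are ours, so the results are approximations rather than reported figures.

```python
def prior_from_growth(current: float, growth_pct: float) -> float:
    """Prior-period value implied by a current value and a YoY growth rate."""
    return current / (1.0 + growth_pct / 100.0)


def base_from_increase(increase: float, growth_pct: float) -> float:
    """Prior-period value implied by an absolute increase and a YoY growth rate."""
    return increase / (growth_pct / 100.0)


# Compute & Networking operating income: $32B after 530% growth (as quoted).
prior_operating_income = prior_from_growth(32.0, 530.0)

# Total revenue: ~$34B added at ~125% growth (as quoted, rounded).
prior_revenue = base_from_increase(34.0, 125.0)
current_revenue = prior_revenue + 34.0

print(f"Implied prior-year C&N operating income: ~${prior_operating_income:.1f}B")
print(f"Implied prior-year revenue: ~${prior_revenue:.1f}B")
print(f"Implied current-year revenue: ~${current_revenue:.1f}B")
```

Under these rounded inputs, the segment's operating income a year earlier works out to roughly $5B, and total revenue moves from the high $20Bs to around $60B.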

An Infinite Future?

Amidst all the euphoria, it’s almost unnatural to ask the question: is there anything that can stop Nvidia?

It’s not a bad question to ask, considering that Nvidia has been the beneficiary of past hype cycles (see: crypto) that subsequently fizzled out. Jensen has publicly stated that he believes we are only in the “second or third inning” of the AI wave, implying that Nvidia has many more years to benefit from selling the picks and shovels to AI companies.

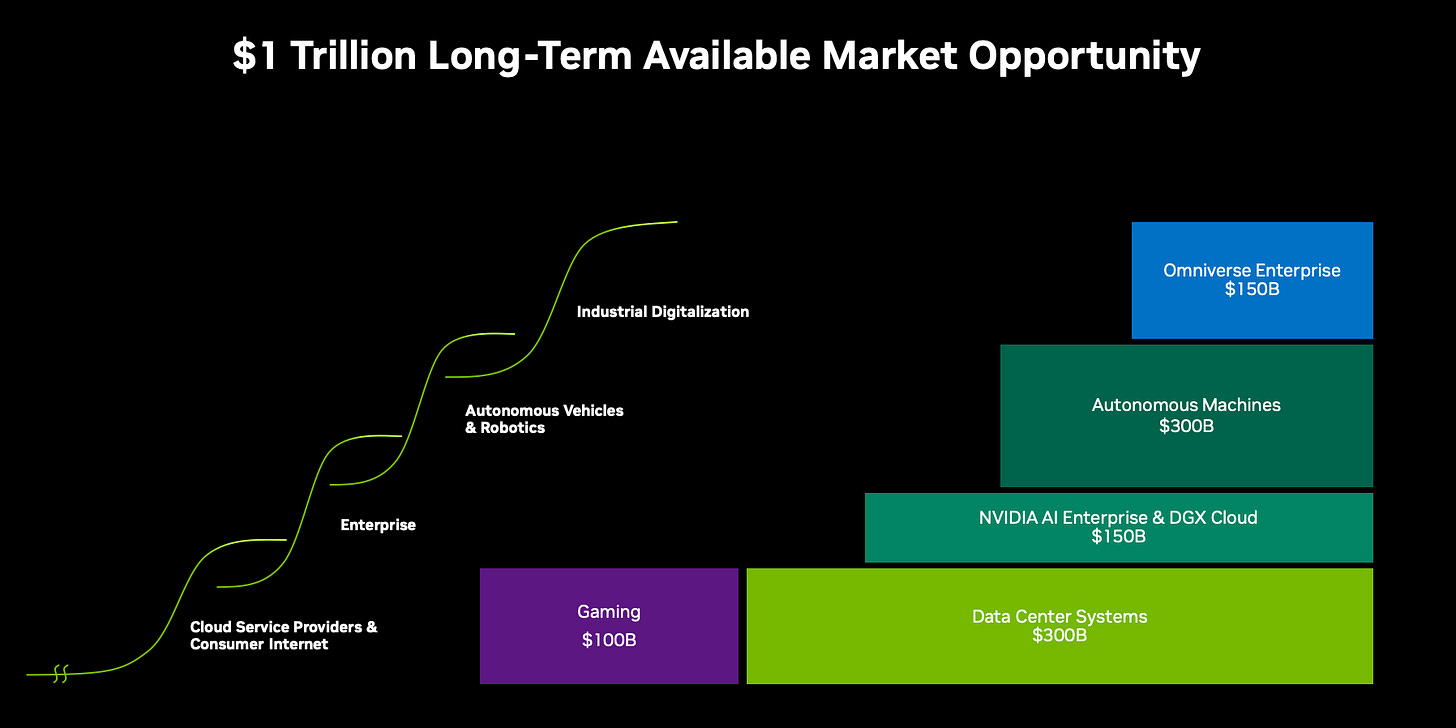

However, Nvidia sees its future as extending far beyond Data Center Systems and Gaming, the two core cash cows of its business today. In its Q3’23 investor presentation, Nvidia included a slide laying out what it believes is its “$1 Trillion Long-Term Available Market Opportunity”. While this may feel hand-wavy and futuristic, it’s not out of the realm of possibility that in a world where AI penetrates every market, and the need for GPUs therefore continues to accelerate, Nvidia’s market opportunity will only continue to increase. For example, 20 out of the top 30 EV makers and 8 out of the top 10 robotaxi makers today are running on Nvidia’s SoCs (systems-on-a-chip). There’s a good chance this number (and the types of products running on Nvidia) will continue to go up.

Still, Nvidia’s future is not without risks. We see three main obstacles that lie ahead for Nvidia:

1) Direct Competition from Other Chipmakers

Nvidia’s success in building chips for AI use cases has not gone unnoticed. Several incumbent and emerging chipmakers could pose a threat to Nvidia:

AMD → Nvidia’s closest direct competitor has historically lagged it on both revenue growth and chip performance. However, AMD recently unveiled the Instinct MI300X accelerator and the Instinct MI300A accelerated processing unit (APU), which the company said are built to train and run LLMs. CEO Lisa Su claimed that MI300X is comparable to Nvidia’s H100 chips in training LLMs but performs better on the inference side: 1.4 times better than H100 when working with Meta’s Llama 2, a 70 billion parameter LLM. Elon Musk even mentioned he would plan to buy $500M of AMD chips for Tesla. Nvidia remains the darling in AI, but AMD could begin to put a serious dent in its market dominance.

Intel → At one point the largest and most dominant American chip manufacturer, Intel now has a ~$190B market cap, about 1/10th the value of Nvidia, largely due to perceptions that it missed the AI wave and was overreliant on its flagship CPUs vs. Nvidia’s GPUs. However, in December it unveiled Gaudi3, its answer to Nvidia’s H100 and AMD’s MI300. It remains to be seen how many AI workloads will run on Gaudi3.

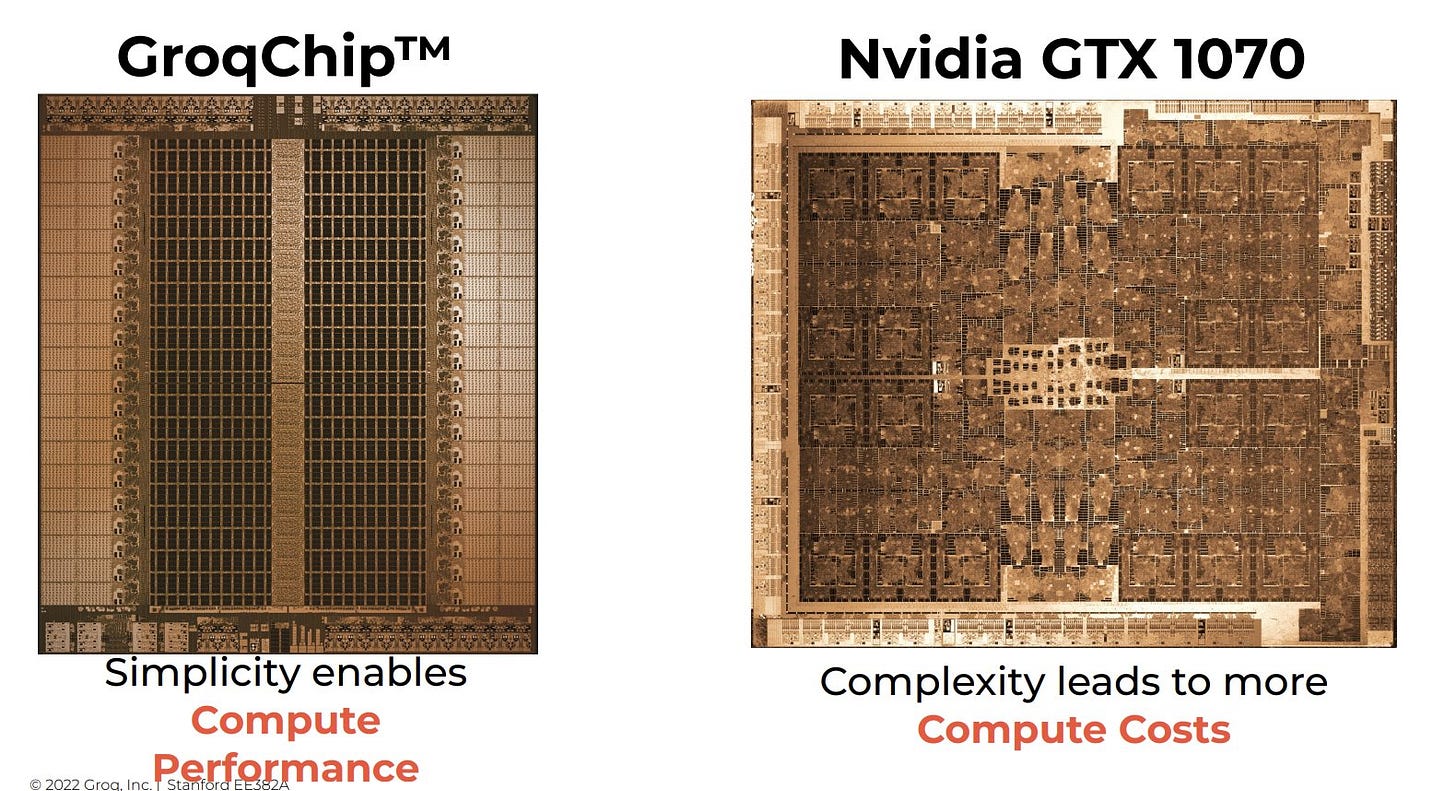

Startups (Groq, Graphcore, Cerebras, etc.) → And it’s not just incumbents that have Nvidia in their crosshairs, but burgeoning startups as well. The Information cites 18 startups working on building AI chips, which have raised $6B+ from investors and are collectively worth $25B. The 8-year-old Groq recently made waves by providing ultra-fast inference speeds (up to 500 tokens per second!) using its Language Processing Unit (LPU), designed for LLMs such as GPT, Llama, and Mistral. We fully expect Groq and other emerging startups like Graphcore and Cerebras to increasingly gain a foothold in the market.

2) Big Tech Builds In-House

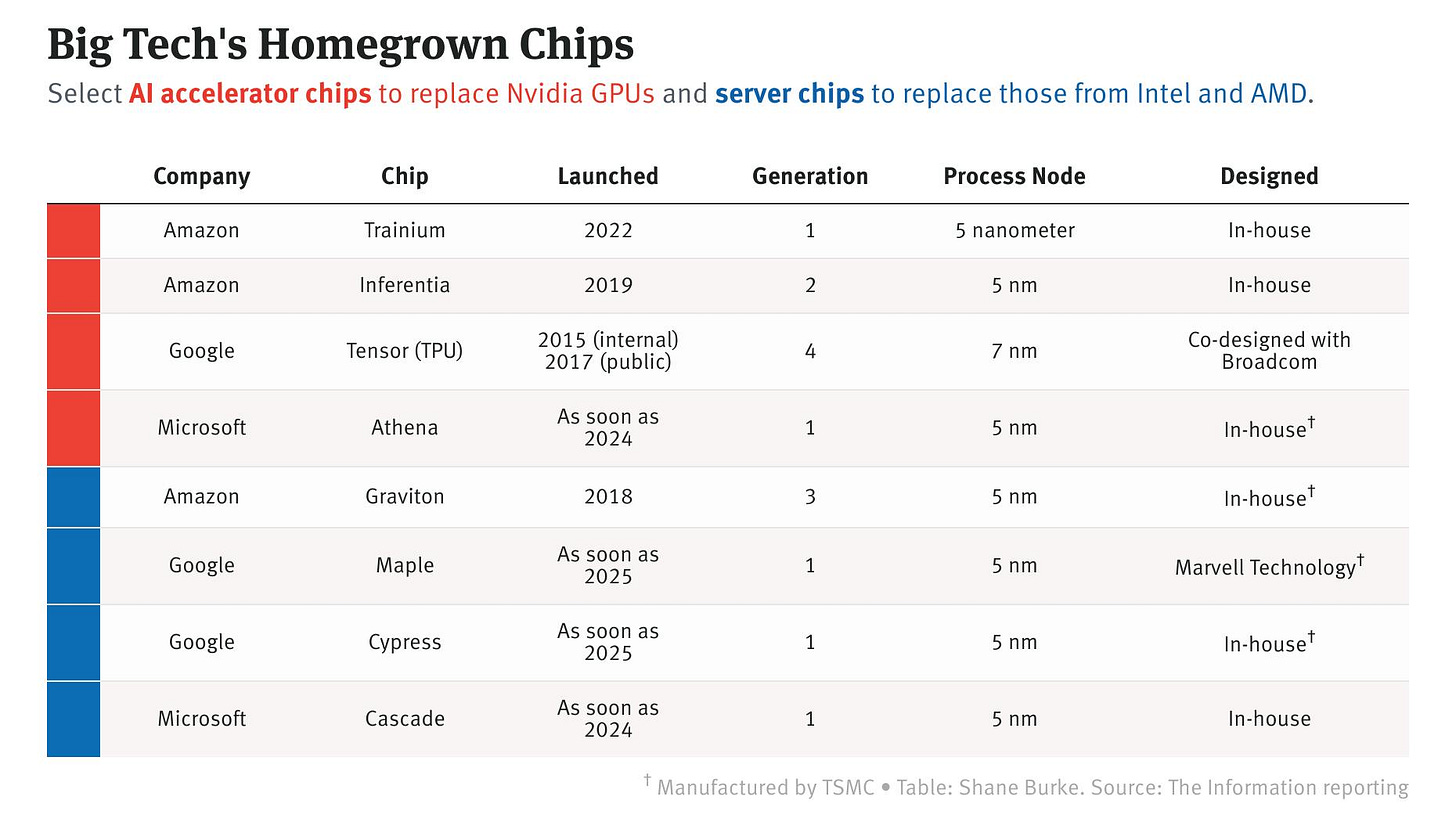

Nvidia also faces pressure from large customers, particularly the hyperscalers (who make up >50% of Nvidia’s AI server chip revenue), that are now looking to build their own AI infrastructure in-house, and lower their reliance on Nvidia.

Just this week, The Information reported that Microsoft is “developing a networking card to ensure that data moves quickly between its servers, as an alternative to one supplied by Nvidia”. Microsoft currently spends billions with Nvidia to power its AI services (Copilot, Bing, OpenAI, etc.), which eats into its gross profits. By building proprietary networking gear, along with developing its own server chips (“Maia”), Microsoft can significantly reduce its dependence on third-party chip manufacturers like Nvidia, and have more control over its own OpEx.

Amazon is doing something similar with AWS Inferentia and homegrown server networking technology rivaling Nvidia’s InfiniBand. Google has been investing for years in tensor processing units (TPUs), which can serve up massive compute processing power.

While Microsoft, Amazon, and Google are all strong Nvidia customers and partners today (Jensen even keynoted AWS re:Invent last year!), those relationships could weaken as each realizes how central chips and processing power are to their own AI ambitions.

3) Geopolitical risk

Last but certainly not least, Nvidia is subject to the geopolitical risk that all chipmakers face. One of the primary risks comes from the intensifying US-China tech rivalry, where export controls and sanctions limit Nvidia’s ability to sell its advanced chips to Chinese companies. Last October, the US Department of Commerce announced plans to restrict the export of A800s and H800s (in addition to previous bans on H100s) to China.

Nvidia also relies on TSMC, based in Taiwan, to manufacture virtually all of its chips. The relationship between China and Taiwan remains tense, which in part fueled TSMC’s decision to build new fabs in Arizona. However, those plans have run into obstacles, with a shortage of workers with adequate expertise and reduced incentives both delaying the $40B project.

Conclusion

Few companies in the history of capitalism have grown as quickly and meaningfully as Nvidia has in the past 18 months. They have become perhaps the biggest beneficiary of the current AI wave by entrenching themselves as the foundational building block to serve AI. With a massive balance sheet, a singularly talented founder-CEO, and a “trillion dollar market opportunity”, the sky is truly the limit for Nvidia.

Will they continue to dominate the AI market, or will their dominance be eroded by a mix of emerging startups, new technologies, and “friends turning into foes”? No question, it will be an epic ride!

We hope you enjoyed this edition of Aspiring for Intelligence, and we will see you again in two weeks! This is a quickly evolving category, and we welcome any and all feedback around the viewpoints and theses expressed in this newsletter (as well as what you would like us to cover in future writeups). And it goes without saying but if you are building the next great intelligent application and want to chat, drop us a line!